Mastering Art Style Consistency with Z-Image: Create Cohesive Visual Series in 2026

Description: Struggling to maintain the same art style across multiple AI-generated images? Learn proven techniques to achieve perfect style consistency using Z-Image, from prompt engineering to advanced LoRA training. Create cohesive series for comics, brands, and game assets.

Introduction: The Style Consistency Challenge

You've experienced it: you generate a stunning image in Z-Image with perfect lighting, color grading, and artistic flair. Excited, you prompt for the next image in your series—only to find the style has drifted. The shadows are deeper, the colors are muted, or the entire aesthetic has shifted to something completely different.

This isn't just frustrating—it's a dealbreaker for professional creative work. Style consistency is critical for:

- Brand campaigns where every asset must feel like part of the same family

- Comic books and graphic novels requiring uniform visual language across panels

- Game development where UI, characters, and environments must share cohesive aesthetics

- Social media series building recognizable visual identities

- Product photography needing consistent look across hundreds of SKUs

The gap between generating one great image and maintaining a style across dozens is large. Many creators give up and accept inconsistency as the cost of using AI. But 2026 has brought new solutions: Z-Image offers tools designed specifically for the style consistency problem.

In this guide, you'll learn battle-tested workflows to maintain art style consistency across any series, from simple prompt templates to advanced LoRA training. Let's dive in.

Understanding Style vs. Character Consistency

Before we dive into solutions, let's clarify what we're solving for. Two distinct consistency challenges exist in AI image generation:

Character Consistency: Keeping the same person/subject across images

- Same face, body, clothing

- Different poses, scenes, expressions

- Example: A superhero appearing in 20 comic panels

Style Consistency: Maintaining the same artistic look across images

- Same lighting, color palette, rendering technique

- Different subjects, compositions, scenes

- Example: 10 different product shots sharing identical aesthetic

This guide focuses on style consistency—creating visual cohesion across varied subjects. If you need character consistency techniques, check out our comprehensive guide on character consistency or identity lock methods.

Why does style drift happen in the first place?

The Root Causes of Style Drift

1. Prompt Inconsistency

Even small wording changes trigger different interpretations:

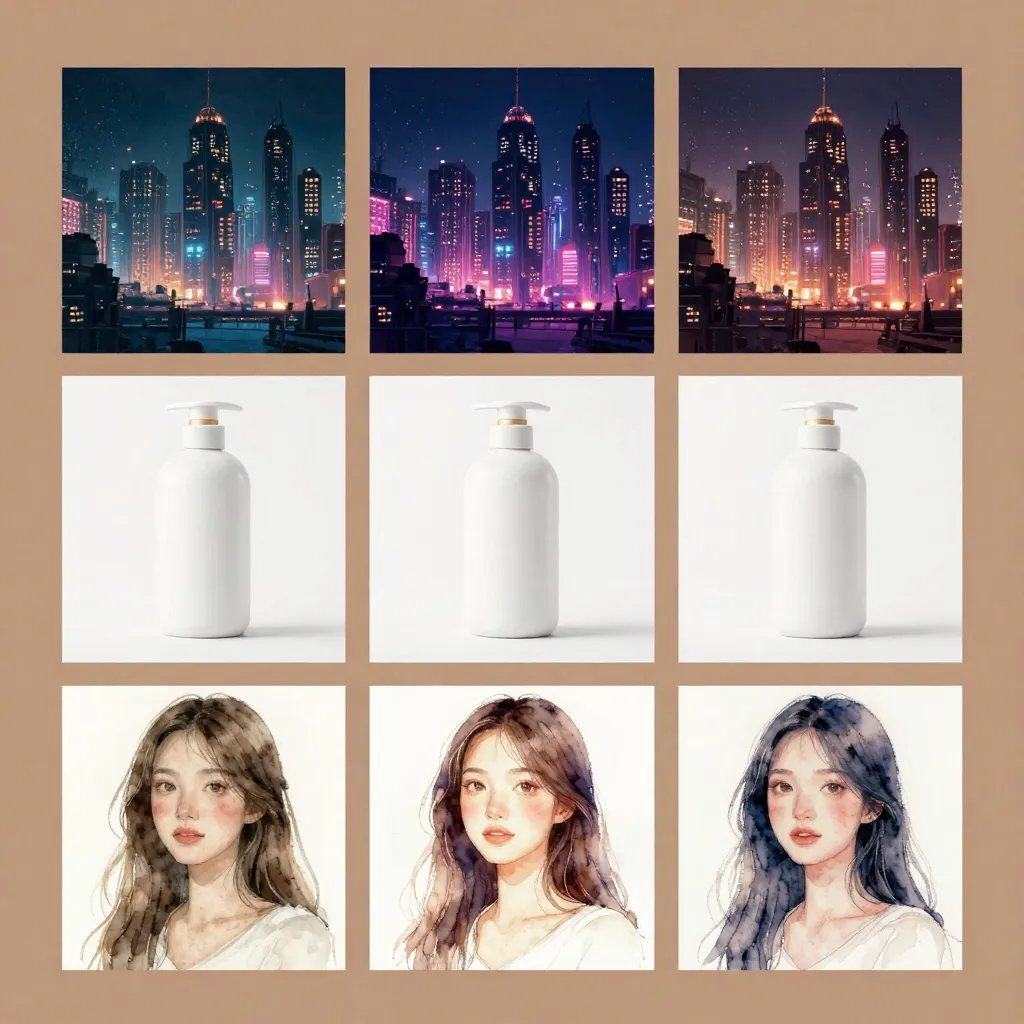

- Image 1: "cyberpunk city, neon lights, dramatic lighting"

- Image 2: "futuristic city, bright lights, cinematic lighting"

- Result: Similar concepts, different aesthetic execution

2. Random Seed Variations

AI generation starts from random noise. A different seed means a different starting point, and therefore different stylistic decisions, even with an identical prompt.

3. Model Interpretation Drift

Z-Image, like all diffusion models, samples probabilistically. Each generation is independent, so small variations from image to image add up to a noticeable style spread across a series.

4. Parameter Inconsistency

Varying CFG scale, sampling steps, or resolution between generations introduces subtle style differences.

Now that we understand the problem, let's solve it with proven workflows.

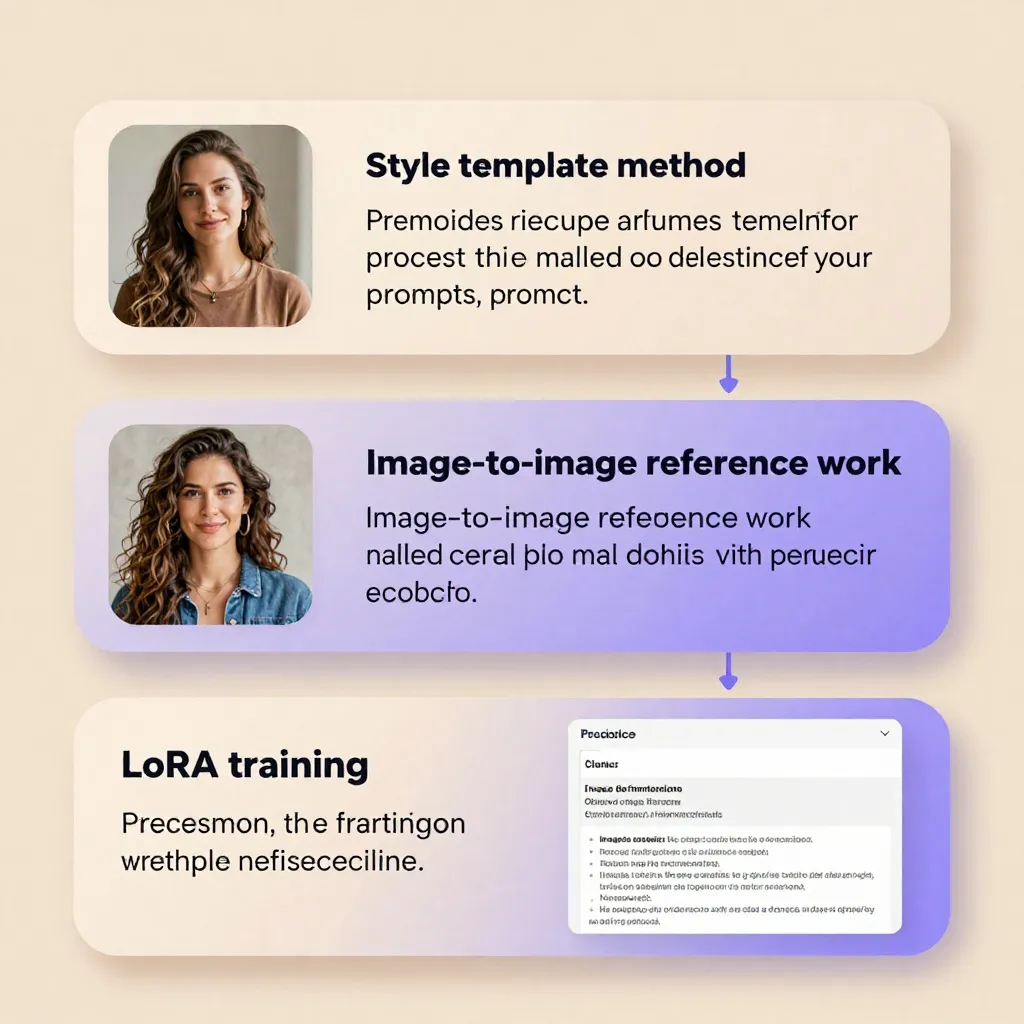

Core Technique 1: The Style Template Method

The simplest path to style consistency is treating style like a formula—specifying it explicitly and reusing it unchanged.

Building Your Style Template

Create a dedicated style template that you'll append to every prompt:

[STYLE TEMPLATE]

Artistic Style: [e.g., "cyberpunk concept art", "watercolor illustration", "photorealistic product photography"]

Color Palette: [e.g., "neon cyan and magenta with deep shadows", "warm earth tones", "black and white with high contrast"]

Lighting: [e.g., "dramatic rim lighting", "soft diffused daylight", "harsh studio strobes"]

Rendering: [e.g., "digital painting with visible brushstrokes", "crisp vector lines", "film photography with grain"]

Technical: [e.g., "8K resolution, highly detailed", "lo-fi aesthetic with texture", "minimalist flat design"]

Camera: [e.g., "wide angle lens, deep depth of field", "85mm portrait lens, bokeh background"]

Example Template for Brand Series:

[STYLE TEMPLATE]

Artistic Style: Modern minimalist product photography

Color Palette: Clean white background with subtle warm gray gradients, accent color in coral red

Lighting: Soft three-point studio lighting with gentle shadows

Rendering: Sharp focus, commercial photography quality, 4K resolution

Technical: Professional product photography, seamless backdrop

Camera: 100mm macro lens, f/8 aperture, front-on composition

Usage:

- Image 1: "Wireless headphones on white background [STYLE TEMPLATE]"

- Image 2: "Smartwatch charging on white background [STYLE TEMPLATE]"

- Image 3: "Bluetooth speaker on white background [STYLE TEMPLATE]"
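The usage pattern above can be scripted so the style text is never retyped by hand. The following is a minimal Python sketch, not part of any Z-Image API: the `StyleTemplate` class and `build_prompt` helper are hypothetical names introduced here, and the field values are copied from the brand template above.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class StyleTemplate:
    """One locked style description; fields mirror the template sections."""
    artistic_style: str
    color_palette: str
    lighting: str
    rendering: str
    technical: str
    camera: str

    def render(self) -> str:
        # Join the fields in declaration order so the style text is
        # byte-identical in every prompt of the series.
        return ", ".join(getattr(self, f.name) for f in fields(self))


def build_prompt(subject: str, style: StyleTemplate) -> str:
    # Subject first, style template appended at the end.
    return f"{subject}, {style.render()}"


BRAND_STYLE = StyleTemplate(
    artistic_style="modern minimalist product photography",
    color_palette="clean white background with subtle warm gray gradients, accent color in coral red",
    lighting="soft three-point studio lighting with gentle shadows",
    rendering="sharp focus, commercial photography quality, 4K resolution",
    technical="professional product photography, seamless backdrop",
    camera="100mm macro lens, f/8 aperture, front-on composition",
)

subjects = [
    "Wireless headphones on white background",
    "Smartwatch charging on white background",
    "Bluetooth speaker on white background",
]
prompts = [build_prompt(subject, BRAND_STYLE) for subject in subjects]

for prompt in prompts:
    print(prompt)
```

Because the dataclass is frozen, an accidental edit to the template mid-series raises an error instead of silently changing the style text.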

Pro Tips for Template Success

- Lock the template: Never modify your style template between generations. If you need to adjust the style, create a new template for a new series.

- Order matters: Place your style template at the end of your prompt for maximum influence over the output.

- Test thoroughly: Generate 10+ test images with your template before committing to a series. If you see drift, refine the template with more specific descriptors.

- Document everything: Save your successful templates in a reference document. Over time, you'll build a library of reliable styles.

The template method works beautifully for straightforward projects, but it has limitations—it can't capture nuanced artistic styles that are difficult to describe in words. That's where reference images come in.

Core Technique 2: Image-to-Image Style Locking

When words fail to capture a style perfectly, use Z-Image's image-to-image (img2img) capabilities to lock your aesthetic visually.

How Img2Img Style Locking Works

Instead of generating from scratch, you upload a reference image that embodies your target style. Z-Image then preserves the visual qualities of that image while generating new content based on your prompt.

Step-by-Step Workflow:

- Create your master style reference

  - Generate or find an image that perfectly captures your desired aesthetic

  - This image should have the right lighting, color, and rendering technique: everything you want to replicate

  - Save this image as your "style anchor"

- Access Z-Image's img2img feature

  - Navigate to the Z-Image Turbo image-to-image interface

  - Upload your master style reference

- Configure denoising strength

  - Low strength (0.2-0.4): Preserves most of the reference image, only subtle changes

  - Medium strength (0.5-0.6): Balance between preserving style and allowing new content

  - High strength (0.7-0.9): Major changes to composition/subject while maintaining stylistic qualities

- Generate your series

  - Use the same reference image for all generations

  - Vary your content prompt (different subjects, scenes, actions)

  - Keep denoising strength consistent across the series
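To build intuition for the denoising strength ranges above, it helps to see what the setting does in a common open-source implementation. In the Stable Diffusion img2img pipeline of the `diffusers` library, strength decides how much of the sampling schedule is actually run on top of the noised reference. The sketch below reproduces that arithmetic in plain Python; whether Z-Image's hosted interface maps strength to steps the same way is an assumption, and `effective_steps` is a name introduced here for illustration.

```python
def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps actually run for a given img2img strength.

    Mirrors the common rule: run only the last `steps * strength` steps,
    so low strength leaves most of the reference image untouched.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    return min(int(num_inference_steps * strength), num_inference_steps)


# Compare the low, medium, and high ranges for a 30-step schedule.
for strength in (0.3, 0.5, 0.8):
    steps = effective_steps(30, strength)
    print(f"strength={strength}: {steps} of 30 steps applied to the reference")
```

Under this rule, changing either the step count or the strength changes how far each image moves from the reference, which is one reason to fix both for the whole series.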

Practical Example:

Master Reference: A photorealistic portrait of a woman with warm golden lighting, shallow depth of field, film grain texture.

Series Prompts:

- "Man in business suit, same lighting and photography style"

- "Child playing in park, same lighting and photography style"

- "Elderly couple holding hands, same lighting and photography style"

All three images will share the warm golden lighting, film grain, and shallow depth of field—even though subjects differ completely.

Advanced Img2Img Strategies

Multiple Reference Blending:

Upload 2-3 reference images sharing similar qualities. Z-Image will average their styles, often producing more consistent results than single references.

Example: Upload 3 different cyberpunk cityscapes with neon lighting. Use them collectively to generate cyberpunk character portraits, vehicles, and weapons—all sharing the same neon aesthetic.

Style Transfer Workflow:

- Generate an image with basic content you like

- Use that image as input for img2img with a style reference

- Result: Your content, transformed into your reference style

This is particularly powerful for product photography and virtual staging, where you need consistent style across varied subjects.

Core Technique 3: Prompt Engineering for Style

Precision in your language directly translates to precision in your style. These prompt engineering techniques minimize drift through clear communication.

Technique 3.1: Style Anchoring

Style anchors are specific, unchangeable descriptors that appear in every prompt. They act as your "style DNA."

Build Your Style Anchors:

- Identify core style elements from your reference or vision:

  - Color: "rich jewel tones with emerald green and ruby red accents"

  - Lighting: "dramatic chiaroscuro lighting with strong shadows"

  - Texture: "visible oil paint brushstrokes on canvas texture"

  - Mood: "melancholic and atmospheric"

- Create an anchor phrase combining these elements:

  [STYLE ANCHOR] Rich jewel tones with emerald green and ruby red accents, dramatic chiaroscuro lighting with strong shadows, visible oil paint brushstrokes on canvas texture, melancholic and atmospheric mood

- Append the anchor to EVERY prompt in your series:

  - "Woman reading in library [STYLE ANCHOR]"

  - "Man walking through forest [STYLE ANCHOR]"

  - "Child playing with toy [STYLE ANCHOR]"

Why this works: By repeating identical style descriptors, you force the model to prioritize those qualities over random variation.

Technique 3.2: Negative Style Prompting

Tell Z-Image what NOT to do—preventing unwanted style elements from creeping in.

Example Negative Prompts:

--no "bright colors, flat lighting, cartoon style, low resolution, blurry, oversaturated"

Use negative prompts when you notice consistent problems in your outputs. If images are consistently too bright, add "--no bright lighting" to your workflow.

Technique 3.3: Parameter Locking

Technical parameters profoundly affect style. Lock them for consistency:

Locked Settings for Style Consistency:

| Parameter | Setting | Purpose |
|---|---|---|
| CFG Scale | Fixed value (e.g., 7.5) | Controls how strongly the prompt influences output |
| Sampling Steps | Fixed value (e.g., 30) | Affects refinement and detail level |
| Seed | Same seed, or random with consistent prompts | Identical seed = identical style foundation |
| Resolution | Fixed aspect ratio and size | Prevents composition/style shifts from format changes |
| Denoising Strength | Fixed value for img2img | Ensures consistent style adherence |

Document these settings in your project files. Randomly tweaking parameters between generations is a guaranteed path to style drift.
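One way to document locked settings is to keep them in a single immutable record and store a short fingerprint of that record next to each image. The sketch below is a hypothetical helper, not a Z-Image feature: the `LockedSettings` class is introduced here, the CFG and step values come from the table above, and the seed, resolution, and strength values are placeholders.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class LockedSettings:
    cfg_scale: float = 7.5
    sampling_steps: int = 30
    seed: int = 1234                 # placeholder seed
    width: int = 1024                # placeholder resolution
    height: int = 1024
    denoising_strength: float = 0.5  # placeholder img2img strength

    def fingerprint(self) -> str:
        # Hash a sorted JSON dump so the same settings always produce the
        # same short ID, and any changed value produces a different one.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


series_settings = LockedSettings()
tweaked = LockedSettings(cfg_scale=8.0)

same_settings = series_settings.fingerprint() == LockedSettings().fingerprint()
drift_detected = series_settings.fingerprint() != tweaked.fingerprint()
print(series_settings.fingerprint(), same_settings, drift_detected)
```

Recording the fingerprint in each file name or sidecar file makes it easy to spot an image that was generated with different settings from the rest of the series.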

Advanced Technique 4: LoRA Training for Perfect Style Consistency

For ultimate style control—especially for long-term projects or brand work—train a custom LoRA model that learns your exact aesthetic.

What is a LoRA?

A LoRA (Low-Rank Adaptation) is a small, trainable model adapter that "teaches" a base model new concepts. You can train a LoRA on:

- Artistic styles (e.g., your brand's unique illustration aesthetic)

- Textures (e.g., specific rendering techniques like crosshatching or watercolor)

- Color palettes (e.g., your brand's signature color schemes)

- Lighting setups (e.g., specific studio lighting configurations)
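For readers who want the math behind the adapter, this is the standard LoRA formulation. It is general to the technique rather than specific to Z-Image. The symbol $s$ stands for the inference-time LoRA strength that many tools expose as a slider; treating it as a simple multiplier is the usual convention, and is assumed here.

```latex
W' = W_0 + s \cdot \frac{\alpha}{r} \, B A,
\qquad B \in \mathbb{R}^{d \times r},\quad
A \in \mathbb{R}^{r \times k},\quad
r \ll \min(d, k)
```

Here $W_0$ is a frozen weight matrix of the base model, $B$ and $A$ are the small trained matrices of rank $r$, and $\alpha$ is a fixed scaling constant chosen at training time. Setting $s = 0$ recovers the base model, and $s = 1$ applies the trained style at full strength.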

When to Use LoRA Training

LoRA training is worth the effort when:

- You need hundreds of images in the same style

- Your style is difficult to describe in words

- You're working on brand assets requiring perfect consistency

- You've tried template/img2img methods but still see drift

- You want to reduce prompt complexity (a trained style needs fewer descriptors)

Step-by-Step LoRA Training Workflow

Phase 1: Prepare Your Training Data

- Collect 15-30 images that embody your target style

  - Images should vary in subject but share identical aesthetic qualities

  - Example: For a "vintage comic book" style LoRA, collect diverse panels (portraits, action scenes, backgrounds) all in that style

- Ensure image quality

  - High resolution (minimum 1024x1024)

  - Consistent aesthetic across all training images

  - No watermarks or text overlay (unless part of the style)

Phase 2: Train Your LoRA

Using WaveSpeed's LoRA training or similar platforms:

- Upload your training dataset

- Select Z-Image as your base model

- Set training parameters:

  - Learning rate: Start with default (typically 0.0001-0.0004)

  - Epochs: 10-20 is sufficient for style LoRAs

  - Batch size: Use platform defaults

- Trigger training (typically takes 15-30 minutes)
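To get a feel for how dataset size, epochs, and batch size interact, the following sketch computes the number of optimizer steps a run performs. It assumes every image is seen exactly once per epoch. Training platforms may add per-image repeats or other multipliers, so treat the numbers as rough orientation only. The function name is introduced here for illustration.

```python
import math


def total_training_steps(num_images: int, epochs: int, batch_size: int) -> int:
    """Optimizer steps for a run that sees every image once per epoch."""
    if min(num_images, epochs, batch_size) < 1:
        raise ValueError("all arguments must be positive")
    # A final partial batch still costs one step, hence the ceiling.
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch * epochs


# Smallest and largest configurations from the ranges above, batch size 1.
low = total_training_steps(num_images=15, epochs=10, batch_size=1)
high = total_training_steps(num_images=30, epochs=20, batch_size=1)
print(low, high)
```

With a batch size of 1, the recommended ranges span roughly 150 to 600 steps, a fourfold difference in how much the adapter sees the style.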

Phase 3: Test and Refine

- Generate test images using your LoRA trigger word

  - Simple test: "A portrait in the style of [YOUR_TRIGGER]"

- Evaluate consistency

  - Generate 10+ test images with different subjects

  - Check if style remains consistent across varied content

- Refine if needed

  - If style is too weak: Train more epochs or increase learning rate

  - If style is too strong/overwhelming: Decrease LoRA strength (use 0.6-0.8 instead of 1.0)

  - If style is inconsistent: Add more varied training images

Phase 4: Deploy in Production

Once trained, use your LoRA by including the trigger word in prompts:

A futuristic cityscape, cyberpunk style, in the style of [YOUR_TRIGGER]

A character portrait, cyberpunk style, in the style of [YOUR_TRIGGER]

A weapon design, cyberpunk style, in the style of [YOUR_TRIGGER]

All three will share your trained aesthetic—with minimal additional prompting needed.

LoRA vs. Other Methods: Comparison

| Method | Setup Time | Consistency Level | Flexibility | Best For |
|---|---|---|---|---|
| Style Templates | 5-10 minutes | Medium | High | Quick projects, varied subjects |
| Img2Img | 2-5 minutes | High | Medium | Visual style transfer, iterative refinement |
| LoRA Training | 30-60 minutes | Very High | Low | Long-term projects, brand work, large series |

Z-Image Specific Workflows for Style Consistency

Z-Image offers unique features that make it particularly powerful for maintaining style consistency.

Workflow 1: Turbo Img2Img Pipeline

Z-Image Turbo's speed enables rapid iteration—critical for finding and locking your style.

Rapid Style Discovery Process:

- Generate 10-20 style variations using different prompts

- Identify the strongest stylistic direction

- Use that image as img2img reference

- Generate 10 more variations with that reference

- Select best result as new reference

- Repeat until style is locked

This iterative refinement process leverages Z-Image Turbo's speed (2-4 second generations) to achieve in minutes what would take hours with slower models.

Workflow 2: Cross-Model Style Consistency

For creators who work across multiple tools, Z-Image's compatibility with other models enables hybrid workflows:

Hybrid Style Workflow:

- Generate base style in Z-Image (consistent, reliable)

- Transfer to Midjourney using --sref (style reference) for specific stylistic variations

- Return to Z-Image for final consistency checks and refinements

This approach combines Z-Image's reliability with Midjourney's artistic strengths.

Workflow 3: Batch Generation with Style Lock

Z-Image Turbo supports batch generation—generate multiple images simultaneously with identical parameters.

Batch Style Workflow:

- Prepare your content prompts:

  - "A medieval warrior standing in castle [STYLE ANCHOR]"

  - "A medieval warrior riding horse [STYLE ANCHOR]"

  - "A medieval warrior fighting dragon [STYLE ANCHOR]"

- Generate as a batch (Z-Image applies identical random seed/steps to all)

- Review results together, since style differences are immediately visible side by side

- Regenerate the entire batch if style drifts, ensuring perfect consistency
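If you drive generation from a script rather than the web interface, the batch can be described as a manifest in which every job shares the same parameters. The sketch below only builds that manifest as JSON. It does not call any Z-Image endpoint, and the parameter names and values in `SHARED_PARAMS` are illustrative assumptions, not a documented request format.

```python
import json

STYLE_ANCHOR = "[STYLE ANCHOR]"  # placeholder; substitute your real anchor text

# Hypothetical parameter names and values shared by every job in the batch.
SHARED_PARAMS = {"seed": 1234, "steps": 30, "cfg_scale": 7.5,
                 "width": 1024, "height": 1024}

content_prompts = [
    "A medieval warrior standing in castle",
    "A medieval warrior riding horse",
    "A medieval warrior fighting dragon",
]


def build_batch(prompts, style_anchor, params):
    # Each job is a new dict, so editing one job later cannot silently
    # change the parameters of the others.
    return [{"prompt": f"{p} {style_anchor}", **params} for p in prompts]


batch = build_batch(content_prompts, STYLE_ANCHOR, SHARED_PARAMS)
manifest = json.dumps(batch, indent=2)
print(manifest)
```

Saving the manifest alongside the output images also gives you a record to regenerate the whole batch from if the style drifts.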

Batch generation is particularly powerful for game asset creation and isometric graphics, where dozens of assets must share identical aesthetics.

Real-World Applications: Industry-Specific Workflows

Comic Books and Graphic Novels

Comics demand extreme style consistency across hundreds of panels.

Comic Style Workflow:

- Create a style bible with reference images showing:

  - Line weight and style

  - Shading technique

  - Color palette

  - Speech bubble design

  - Panel layout patterns

- Train a style LoRA on 20-30 pages of artwork in your target style

- Generate panel-by-panel using:

  - LoRA trigger for base style

  - Img2img with previous panel as reference (for temporal consistency)

  - Locked technical parameters (resolution, sampling)

- Quality control checklist for each panel:

  - ✓ Line weight matches style bible

  - ✓ Shading technique consistent

  - ✓ Colors within palette

  - ✓ Perspective matches previous panel

For manga specifically, our manga creation guide covers anime-style consistency workflows.

Brand Campaigns and Product Photography

Brands require pixel-perfect consistency across hundreds of assets.

Brand Style Workflow:

- Define brand visual language:

  - Color palette (exact hex codes)

  - Lighting setup (studio diagram)

  - Background style

  - Photography perspective

  - Post-processing style

- Create master reference images:

  - Hero product shot in target style

  - Lifestyle shot in target style

  - Detail/macro shot in target style

- Generate series using img2img with appropriate master reference:

  - Product on white background → Use "hero product" reference

  - Product in use scene → Use "lifestyle" reference

  - Product detail shots → Use "macro" reference

- Batch processing for consistency:

  - Generate all product shots in single batch

  - Generate all lifestyle shots in single batch

  - Technical parameters locked across batches

This workflow is extensively covered in our product photography guide.

Social Media Content Series

Influencers and content creators need consistent style for recognizable feeds.

Social Media Workflow:

- Identify your aesthetic (e.g., "warm golden hour selfies with bokeh backgrounds")

- Create style template:

  [INSTAGRAM STYLE] Golden hour lighting, warm color temperature, shallow depth of field with bokeh backgrounds, iPhone selfie aesthetic, candid natural expression, soft skin texture

- Generate varied content with same style template:

  - "Portrait at beach [INSTAGRAM STYLE]"

  - "Portrait holding coffee [INSTAGRAM STYLE]"

  - "Portrait with pet [INSTAGRAM STYLE]"

- Maintain consistency by:

  - Never changing style template mid-series

  - Using identical technical settings

  - Posting in consistent sequence (same aesthetic every day)

For content creators, our guide on YouTube thumbnails applies similar consistency principles to video content.

Game Development

Indie game developers need consistent style across UI, characters, environments, and marketing.

Game Asset Workflow:

- Define art direction document:

  - Reference art for each asset category

  - Color palette for each environment

  - Lighting setup for each scene type

  - Perspective rules (isometric vs. top-down vs. side-scrolling)

- Train category-specific LoRAs:

  - "UI_elements" LoRA for menus and icons

  - "Character_portraits" LoRA for avatars

  - "Environment_forest" LoRA for outdoor scenes

  - "Environment_dungeon" LoRA for indoor scenes

- Generate assets by category using appropriate LoRA:

  - All UI elements using "UI_elements" LoRA

  - All character art using "Character_portraits" LoRA

  - All forest backgrounds using "Environment_forest" LoRA

- Quality control:

  - Compare assets side-by-side

  - Check for palette consistency

  - Verify perspective alignment

  - Test in-game context

This approach enables solo developers or small teams to compete with studios in visual cohesion.

Advanced Topics: Pushing Style Consistency Further

Hybrid Style Blending

Combine multiple style references for unique aesthetics:

Technique: Upload two style reference images with different aesthetics (e.g., watercolor painting + neon cyberpunk). Use both simultaneously in img2img.

Result: A unique hybrid style blending watercolor textures with neon lighting—perfect for distinctive brand identities.

Consistent Style Across Different Aspect Ratios

Challenge: Maintaining style while switching between portrait, landscape, and square formats.

Solution:

- Create master reference in 1:1 square format

- For different aspect ratios:

  - Crop master reference to match target ratio

  - Use cropped version as img2img reference

  - Alternatively, generate full composition in target ratio with master as style reference

- Test across all ratios before committing to series
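The cropping step above comes down to a small piece of arithmetic: find the largest centered rectangle with the target ratio inside the master reference. The sketch below computes that box in plain Python. The function name is introduced here, and the 1024x1024 master size is an assumed example. The returned tuple uses the (left, top, right, bottom) order that Pillow's `Image.crop` accepts, so it can be passed straight to that method.

```python
def center_crop_box(width: int, height: int, ratio_w: int, ratio_h: int):
    """Largest centered (left, top, right, bottom) box with the target ratio."""
    if min(width, height, ratio_w, ratio_h) < 1:
        raise ValueError("all arguments must be positive")
    # Compare width/height with ratio_w/ratio_h using integer math.
    if width * ratio_h > height * ratio_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Source is taller than (or equal to) the target: keep full width.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


landscape = center_crop_box(1024, 1024, 16, 9)
portrait = center_crop_box(1024, 1024, 4, 5)
print(landscape, portrait)
```

A centered crop keeps the middle of the reference, so if the stylistically important area of your master image sits off-center, adjust the box by hand.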

Seasonal Style Variations

For long-term campaigns (e.g., holiday content), maintain brand consistency while adapting for seasons.

Workflow:

- Create base style LoRA capturing core brand aesthetic

- Create seasonal modifier LoRAs:

  - "winter_holiday" (snow, cool blues, festive lighting)

  - "summer_beach" (warm brights, palm motifs)

  - "autumn_cozy" (warm oranges, sweater textures)

- Generate seasonal content using both LoRAs:

  Product shot, base brand style, in style of [BASE_LORA] + [WINTER_HOLIDAY_LORA]

  Product shot, base brand style, in style of [BASE_LORA] + [SUMMER_BEACH_LORA]

Result: Brand consistency maintained while adapting to seasonal contexts.

Common Pitfalls and Troubleshooting

Pitfall 1: Prompt Drift

Problem: Gradually changing prompts over time causes style to shift unnoticed.

Example:

- Day 1: "cyberpunk city with neon lights and dramatic shadows"

- Day 10: "futuristic cityscape with bright lighting"

- Day 20: "sci-fi metropolis"

Each change seems small, but style has completely transformed.

Solution: Create a master prompt template and never deviate. Only change content/subject descriptors—never style descriptors.
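Prompt drift is easy to catch mechanically, because the rule is simply that the style text must be identical in every prompt. The following sketch flags any prompt that does not end with the exact master style string. The helper name and the sample prompts are made up for illustration, with the style strings borrowed from the example above.

```python
def find_style_drift(prompts, style_text):
    """Return indexes of prompts that do not end with the exact style text."""
    return [
        index
        for index, prompt in enumerate(prompts)
        if not prompt.rstrip().endswith(style_text)
    ]


MASTER_STYLE = "cyberpunk city with neon lights and dramatic shadows"

series = [
    f"street market at night, {MASTER_STYLE}",
    "rooftop chase, futuristic cityscape with bright lighting",  # drifted
    f"subway platform, {MASTER_STYLE}",
]

drifted = find_style_drift(series, MASTER_STYLE)
print(drifted)
```

Running a check like this over your prompt log before each generation session catches the slow rewording that is otherwise hard to notice.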

Pitfall 2: Parameter Experimentation Mid-Series

Problem: Tweaking CFG scale, sampling steps, or denoising strength "just to see what happens" breaks consistency.

Solution: Lock all technical parameters at series start. Document them clearly. If you must experiment, create a separate test series.

Pitfall 3: Reference Fatigue

Problem: Using the same img2img reference for every image pulls each output toward that reference's specific composition, so the series starts to look repetitive and creative range shrinks.

Solution: Rotate between 3-5 style references that share identical aesthetic qualities but different compositions. This maintains style while allowing creative variety.
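Rotation can be as simple as assigning references to prompts in round-robin order. A minimal sketch, assuming hypothetical file names for the references and made-up content prompts:

```python
from itertools import cycle


def assign_references(prompts, references):
    """Pair each prompt with a style reference in round-robin order."""
    if not references:
        raise ValueError("at least one reference image is required")
    # zip stops at the end of `prompts`; cycle repeats the references forever.
    return list(zip(prompts, cycle(references)))


references = ["style_ref_a.png", "style_ref_b.png", "style_ref_c.png"]
prompts = [
    "Portrait at beach",
    "Portrait holding coffee",
    "Portrait with pet",
    "Portrait in city street",
    "Portrait in studio",
]

for prompt, reference in assign_references(prompts, references):
    print(f"{reference}: {prompt}")
```

A fixed rotation is also reproducible: rerunning the series pairs each prompt with the same reference as before.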

Pitfall 4: Over-Reliance on LoRA

Problem: Training a LoRA too aggressively makes style inflexible—everything looks identical regardless of prompt intent.

Solution:

- Use moderate LoRA strength (0.7-0.9 instead of 1.0)

- Combine LoRA with style templates for additional control

- Retrain if style becomes too restrictive

Pitfall 5: Ignoring Post-Processing

Problem: Color grading, sharpening, or filters applied inconsistently after generation create style variation.

Solution: If you post-process, create a standardized edit preset and apply identically to every image. Better yet, incorporate post-processing effects into your prompts and generate consistently.

Style Consistency Checklist

Before starting any series, complete this checklist:

Planning Phase:

- [ ] Defined target style with reference images or written description

- [ ] Created style template or style anchors

- [ ] Selected method (template / img2img / LoRA) appropriate for project scope

- [ ] Documented all technical parameters (CFG, steps, resolution, etc.)

- [ ] Generated 10+ test images to verify style stability

Execution Phase:

- [ ] Locked all parameters—no changes mid-series

- [ ] Using identical style descriptors in every prompt

- [ ] Batch generating where possible for consistency

- [ ] Quality checking every 10 images against first image

- [ ] Documenting any drift and adjusting approach immediately

Quality Control:

- [ ] Side-by-side comparison of first and last images in series

- [ ] Color palette consistency check (use color picker tool)

- [ ] Lighting consistency check (shadow direction and intensity)

- [ ] Texture/rendering consistency check (brushstrokes, grain, etc.)

- [ ] Final approval from stakeholder (client, art director, yourself)
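The color palette check in the list above can be partly automated once you have sampled a few colors from each image with a color picker. The sketch below flags sampled colors that sit far from every palette color. It uses plain Euclidean distance in RGB, which is a crude measure (perceptual color spaces such as CIELAB are more faithful). The hex codes and the tolerance of 30 are illustrative assumptions, not values from any brand guideline.

```python
def hex_to_rgb(color: str):
    """Convert '#RRGGBB' to an (r, g, b) tuple of ints."""
    color = color.lstrip("#")
    if len(color) != 6:
        raise ValueError(f"expected a 6-digit hex color, got {color!r}")
    return tuple(int(color[i:i + 2], 16) for i in (0, 2, 4))


def rgb_distance(a, b) -> float:
    # Straight-line distance between two colors in RGB space.
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def off_palette(sampled, palette, tolerance: float = 30.0):
    """Sampled colors farther than `tolerance` from every palette color."""
    palette_rgb = [hex_to_rgb(c) for c in palette]
    return [
        c for c in sampled
        if min(rgb_distance(hex_to_rgb(c), p) for p in palette_rgb) > tolerance
    ]


BRAND_PALETTE = ["#FFFFFF", "#B8B2AC", "#FF6F61"]  # hypothetical brand colors
picked = ["#FDFCFB", "#FF7064", "#2244AA"]         # colors sampled from an image

outliers = off_palette(picked, BRAND_PALETTE)
print(outliers)
```

Any color the check reports is worth a manual look: it may be a legitimate subject color rather than a style error.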

The Future of Style Consistency

The AI image generation landscape is evolving rapidly, and style consistency is at the forefront of innovation.

Emerging Technologies:

- Style Preservation Algorithms: New research focuses on "style vectors", mathematical representations of aesthetic qualities that can be perfectly preserved across generations. Unlike reference images, these vectors capture style without being tied to specific compositions.

- Cross-Model Style Portability: Tools are emerging that allow you to train a style in one model (e.g., Z-Image) and transfer it to another (e.g., Midjourney or Flux). This enables workflows leveraging each tool's strengths while maintaining consistent style.

- Real-Time Style Preview: Imagine adjusting style parameters and seeing results instantly, with no waiting for full generation. This is already appearing in tools like Krea AI and will likely become standard.

- Automated Style Matching: Future tools may analyze an existing brand asset library and automatically generate new assets matching that style without manual training or prompting.

Industry Trends:

- Greater integration of style consistency features into mainstream tools (no more complex ComfyUI workflows)

- Improved reference-based generation with better style understanding

- Brand-specific AI models pre-trained on company visual guidelines

- Style marketplace where creators can buy/sell trained style models

Z-Image continues to invest heavily in style consistency features, with ongoing improvements to its img2img capabilities and LoRA training infrastructure. The gap between professional designers and AI-assisted creators is narrowing rapidly.

Action Plan: Implementing Style Consistency in Your Workflows

Based on everything covered, here's a step-by-step implementation plan:

For Beginners (Starting Today):

- Choose a simple project (e.g., 5 Instagram posts in consistent style)

- Create a style template with 5-7 specific descriptors

- Generate 10 test images, refine template until style is stable

- Generate your series using identical prompts with varied content

- Compare side-by-side and celebrate your consistency

For Intermediate Users (This Week):

- Identify a larger project (e.g., 20-product photography series)

- Create master style reference image via generation or curation

- Set up Z-Image img2img workflow with fixed denoising strength

- Generate series in batches of 5, quality-checking each batch

- Document what worked for future projects

For Advanced Users (This Month):

- Train a custom style LoRA for your brand or artistic identity

- Set up batch generation pipeline with locked parameters

- Create style bible documenting your aesthetic for team reference

- Implement hybrid workflow combining LoRA + img2img + templates

- Build automated quality control checks into your pipeline

Conclusion: Style Consistency is Now Achievable

Gone are the days when maintaining consistent art style across a series required traditional artistic skills or expensive custom training. With Z-Image's img2img capabilities, LoRA training infrastructure, and the workflows outlined in this guide, creators of all skill levels can achieve professional-grade style consistency.

The key is choosing the right technique for your needs:

- Quick one-off projects: Style template method

- Medium series with visual references: Img2img style locking

- Long-term brand work or large series: Custom LoRA training

Start simple, measure your results, and scale up sophistication as needed. The style consistency challenge is solvable—and 2026 is the year it becomes accessible to everyone.

Ready to create your first consistent series? Start with Z-Image's image-to-image feature and build your style consistency workflow from there. For brand-specific guidance, our Z-Image commercial use guide covers legal considerations for professional work.

Your cohesive, visually stunning series awaits. Let's make it happen.

Additional Resources

- Z-Image Turbo Review: Speed vs Quality - Understanding trade-offs in generation settings

- Mastering Z-Image Edit Guide - Post-processing for consistency

- Z-Image Prompting Masterclass - Advanced prompt engineering

- Best AI Image Generators 2026 - Market overview and comparisons

- LoRA Training Explained - Deep dive on custom model training
