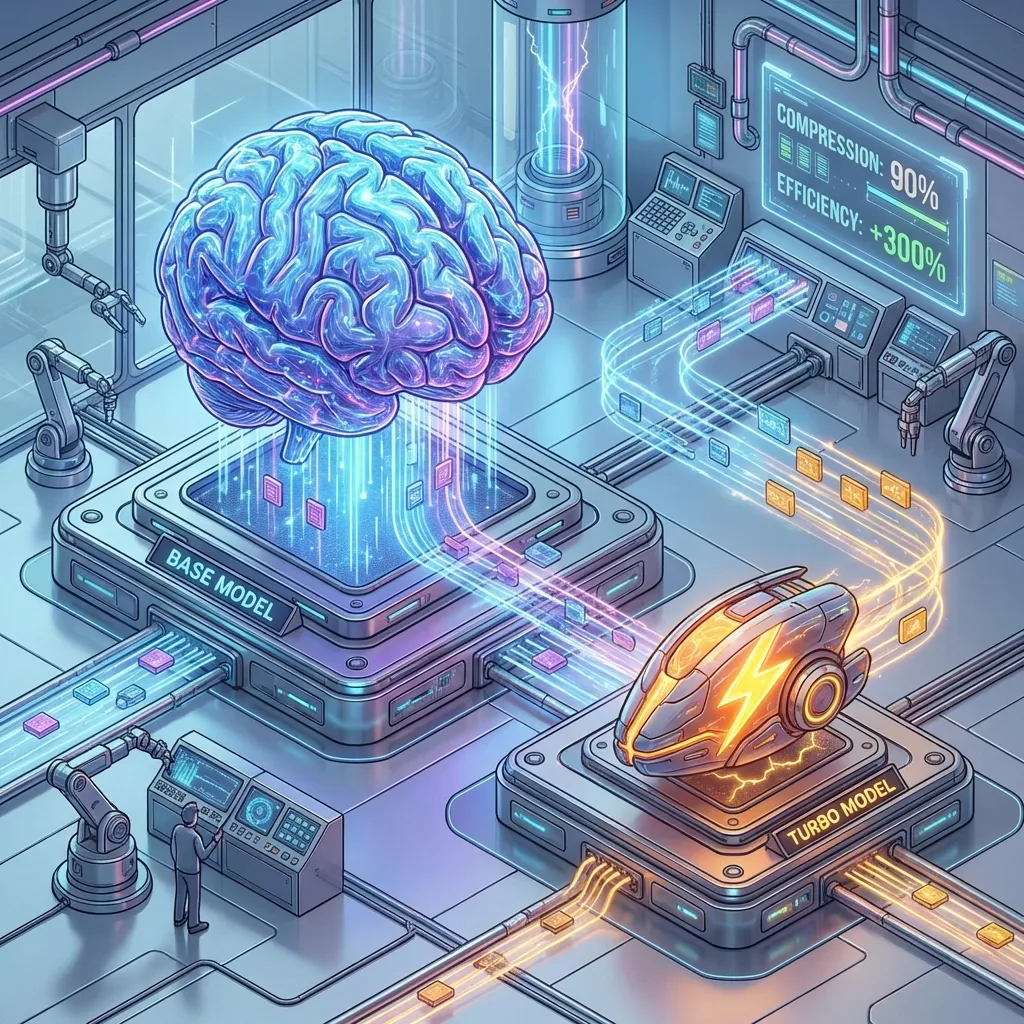

Z-Image Base vs Turbo: The Next Leap in Open Source AI Art?

Description: Z-Image Turbo amazed us with speed, but the community is waiting for the real game-changer: Z-Image Base. Here's why the non-distilled 6B model will redefine fine-tuning and local AI art in 2026.

Author: Dr. Aris Thorne

Date: 2026-01-17

The open-source AI art community is never satisfied for long. Just months after the release of Z-Image Turbo, which shattered inference speed records with its distilled architecture, discussions on Reddit and GitHub have shifted to a singular focus: Z-Image Base.

While Z-Image Turbo currently holds the crown for the fastest high-quality generation on consumer GPUs, seasoned creators know that "Turbo" models, by design, sacrifice something nearly invisible to the casual user but vital to the pro: plasticity, the ability to keep learning new concepts.

In this deep dive, we'll explore why the upcoming Z-Image Base (the non-distilled, 6-billion-parameter foundation model) is poised to be the most important release of early 2026, and compare it directly with its speedster sibling.

The Distillation Dilemma: Speed vs. Flexibility

To understand the hype, we must understand the difference between these two models. Z-Image Turbo is so fast because it is a distilled model. It relies on few-step distillation, the same family of techniques as Adversarial Diffusion Distillation (ADD) and Latent Consistency Models (LCM), to cut the number of sampling steps needed per image from 30+ down to a handful.
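To make the speed gap concrete, here is a back-of-the-envelope sketch that counts denoiser forward passes per image. It assumes the undistilled model runs with classifier-free guidance (two passes per step: conditional plus unconditional) and that the distilled model has guidance baked in (one pass per step), which is common for distilled models but is an assumption here, not a confirmed Z-Image detail. The step counts are illustrative rather than official settings, and text encoding and VAE decoding are ignored.

```python
def forward_passes(steps: int, cfg: bool) -> int:
    """Number of denoiser forward passes needed for one image.

    Classifier-free guidance (CFG) evaluates the model twice per step
    (conditional + unconditional), so it doubles the count.
    """
    return steps * (2 if cfg else 1)


# Illustrative settings only, not official Z-Image defaults.
base_cost = forward_passes(steps=30, cfg=True)    # undistilled "teacher" with CFG
turbo_cost = forward_passes(steps=4, cfg=False)   # distilled "student", guidance baked in

print(base_cost, turbo_cost, base_cost / turbo_cost)
```

Under these assumptions the distilled model does an order of magnitude less work per image, which is where most of Turbo's speed comes from.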

This compression is brilliant for inference, delivering an image in a fraction of the usual time, but it "freezes" the model's behavior: the weights are trained to take a few large jumps instead of many small refinements. Collapsing that internal "thought process" makes the model significantly harder to steer with external adapters.

Why Base is the "Teacher"

Z-Image Base is the "teacher" model. It hasn't been step-distilled, so its weights still encode the full, gradual denoising process and the relationships between concepts it learned along the way, rather than a shortcut tuned for a few steps.

- Turbo: "Draw a cat" -> Accesses shortcut -> Cat.

- Base: "Draw a cat" -> Understands fur texture, lighting interaction, anatomy -> Cat.

For simple prompts, the results look nearly identical. But when you try to teach the model a new concept (fine-tuning), the Base model has the capacity to learn, while the Turbo model often resists.
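The teacher/student relationship can be illustrated with a deliberately tiny analogy of step distillation. This is a toy, not how Z-Image was trained: the "teacher" integrates the 1-D ODE dx/dt = -x with 30 Euler steps (standing in for a 30-step sampler), and the "student" is a single multiplier fitted by least squares to reproduce the teacher's end point in one evaluation. All function names are hypothetical.

```python
def teacher_sample(x0: float, steps: int = 30) -> float:
    """Toy teacher: integrate dx/dt = -x from t=0 to t=1 with Euler steps."""
    dt = 1.0 / steps
    x = x0
    for _ in range(steps):
        x = x + dt * (-x)  # one "model evaluation" per step
    return x


def distill_student(inputs: list[float], steps: int = 30) -> float:
    """Fit a one-step student x1 = s * x0 to the teacher's outputs by least squares."""
    targets = [teacher_sample(x, steps) for x in inputs]
    num = sum(x * y for x, y in zip(inputs, targets))
    den = sum(x * x for x in inputs)
    return num / den


def student_sample(x0: float, s: float) -> float:
    """Toy student: a single evaluation replaces all of the teacher's steps."""
    return s * x0


training_inputs = [-2.0, -0.5, 0.3, 1.0, 1.7]
s = distill_student(training_inputs)
print(teacher_sample(1.5), student_sample(1.5, s))
```

The student matches the teacher's output, but only the end point survives; the intermediate trajectory the teacher walks through is gone. That loss is the intuition behind the plasticity argument, although real distilled models are vastly more complex than one scalar.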

Fine-Tuning and LoRA: The True Battlefield

The primary reason "Waiting for Base" posts dominate r/LocalLLaMA and r/StableDiffusion is LoRA (Low-Rank Adaptation) training.
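For readers new to LoRA, here is a minimal sketch of the standard formulation: a frozen weight matrix W (d × k) is augmented with a trainable low-rank update (alpha / rank) · B · A, where B is d × rank and A is rank × k. B starts at zero, so training begins exactly from the base model. The matrix sizes are hypothetical, and which layers a given Z-Image trainer targets is not covered here.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def lora_merge(w, a, b, alpha: float, rank: int):
    """Return W + (alpha / rank) * (B @ A). W is d x k, B is d x rank, A is rank x k."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


def lora_param_count(d: int, k: int, rank: int) -> int:
    """Trainable parameters in one LoRA adapter: A (rank x k) plus B (d x rank)."""
    return rank * (d + k)


# Tiny hypothetical layer: d=2 outputs, k=3 inputs, rank 1.
w = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]   # frozen base weights
a = [[0.5, -1.0, 2.0]]                   # rank x k, trainable
b_init = [[0.0], [0.0]]                  # d x rank, zero-initialised
b_trained = [[1.0], [2.0]]               # pretend values after training

print(lora_merge(w, a, b_init, alpha=1.0, rank=1))     # unchanged: equals w
print(lora_merge(w, a, b_trained, alpha=1.0, rank=1))  # base weights plus the learned update

# Hypothetical 4096 x 4096 projection at rank 16: LoRA vs full fine-tuning.
print(lora_param_count(4096, 4096, 16), 4096 * 4096)
```

For that hypothetical 4096 × 4096 layer, the adapter trains 131,072 parameters instead of 16,777,216, under 1% of the layer, which is why LoRA fits on consumer GPUs at all.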

As we discussed in our comparison with Flux and Midjourney, one of the biggest strengths of open-source models is the ability to train them on your own style or characters.

Currently, training a LoRA on Z-Image Turbo is difficult. The distilled nature of the model means it "forgets" fast and struggles to converge on new concepts without destroying its existing knowledge (catastrophic forgetting).

Z-Image Base promises to solve this. With the undistilled weights of the 6-billion-parameter S3-DiT architecture available, trainers expect:

- Lower Training Loss: Faster convergence on styles and faces.

- Higher Fidelity: Better details in complex textures (skin pores, fabric).

- ControlNet Compatibility: Undistilled models generally work much better with ControlNets for pose and structure guidance.

When Can We Expect It?

While Alibaba's Tongyi Lab hasn't given a firm date, community speculation points to late January or early February 2026, possibly coinciding with the Chinese New Year updates.

The GitHub repository activity suggests the code is ready; what likely remains is final safety alignment and documentation before the weights drop.

How to Prepare

If you are planning to switch to Z-Image Base when it launches, you'll need to prepare your hardware. Unlike the nimble Turbo, Base will be heavier to run, since it needs many more sampling steps per image.

- VRAM: Expect to need at least 16GB VRAM to load the model comfortably, or 24GB+ for training.

- Storage: The fp16 weights will likely weigh in around 12-15GB.

- Software: Ensure you have the latest ComfyUI updates. The Z-Image-Nodes are being updated daily. Check our Local Install Guide for the latest setup steps.
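The storage estimate above follows from simple arithmetic: parameter count times bytes per parameter. This sketch assumes the 6 billion figure covers the diffusion transformer alone; the text encoder, VAE, and activations add to real VRAM use, so treat these numbers as a floor. The last line applies a common rule of thumb for full fine-tuning with AdamW in fp32 (weights, gradients, and two optimizer moments at 4 bytes each).

```python
GIB = 1024 ** 3  # bytes in a gibibyte (what many tools report as "GB")


def weight_size_gb(params: float, bytes_per_param: float) -> float:
    """Raw weight size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


PARAMS = 6e9  # "6 billion parameters", as stated in the article

for name, nbytes in [("fp32", 4), ("fp16/bf16", 2), ("fp8", 1)]:
    gb = weight_size_gb(PARAMS, nbytes)
    gib = PARAMS * nbytes / GIB
    print(f"{name:>9}: {gb:5.1f} GB ({gib:5.1f} GiB)")

# Rule of thumb for full fine-tuning with fp32 AdamW:
# weights + gradients + two Adam moments = 4 + 4 + 4 + 4 bytes per parameter,
# before counting activations.
full_ft_gb = weight_size_gb(PARAMS, 16)
print(f"full fine-tune state: ~{full_ft_gb:.0f} GB")
```

At 2 bytes per parameter the fp16 weights come to about 12 GB, the low end of the range quoted above, while full fine-tuning state lands far beyond any consumer card. That gap is why 24GB training setups rely on LoRA rather than updating every weight.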

Conclusion

Z-Image Turbo changed the game for serving AI art, making it real-time and accessible. But Z-Image Base is set to change the game for creating it. For the artists, the trainers, and the explorers who want to push the boundaries of what a silicon brain can dream, the wait is almost over.

Are you sticking with Turbo for speed, or upgrading to Base for power? Let us know in the comments using #ZImageBase.