Z-Image Architecture Deep Dive: Understanding S3-DiT

The landscape of AI image generation is shifting rapidly. While 2024 was dominated by the rise of Flux and the continued evolution of Midjourney, a new contender has emerged from Alibabas Tongyi Lab: Z-Image.

What makes Z-Image different isnt just its 6 billion parameters—its the underlying architecture. In this deep dive, well explore the Scalable Single-Stream Diffusion Transformer (S3-DiT) architecture that powers Z-Image, explaining why it might just be the most efficient and scalable approach weve seen to date.

The Problem with Dual-Stream Architectures

To understand why Z-Image is revolutionary, we first need to look at the status quo. Most current Diffusion Transformers (DiT), including competitors like Flux, utilize a dual-stream architecture.

In a dual-stream setup, the model processes text prompts and visual data separately before fusing them. While effective, this separation creates redundancy and computational overhead. The data has to "travel" further and undergo more complex merging operations to influence the final image.

Enter S3-DiT: The Power of Single-Stream

Z-Image fundamentally changes this by employing a Single-Stream approach.

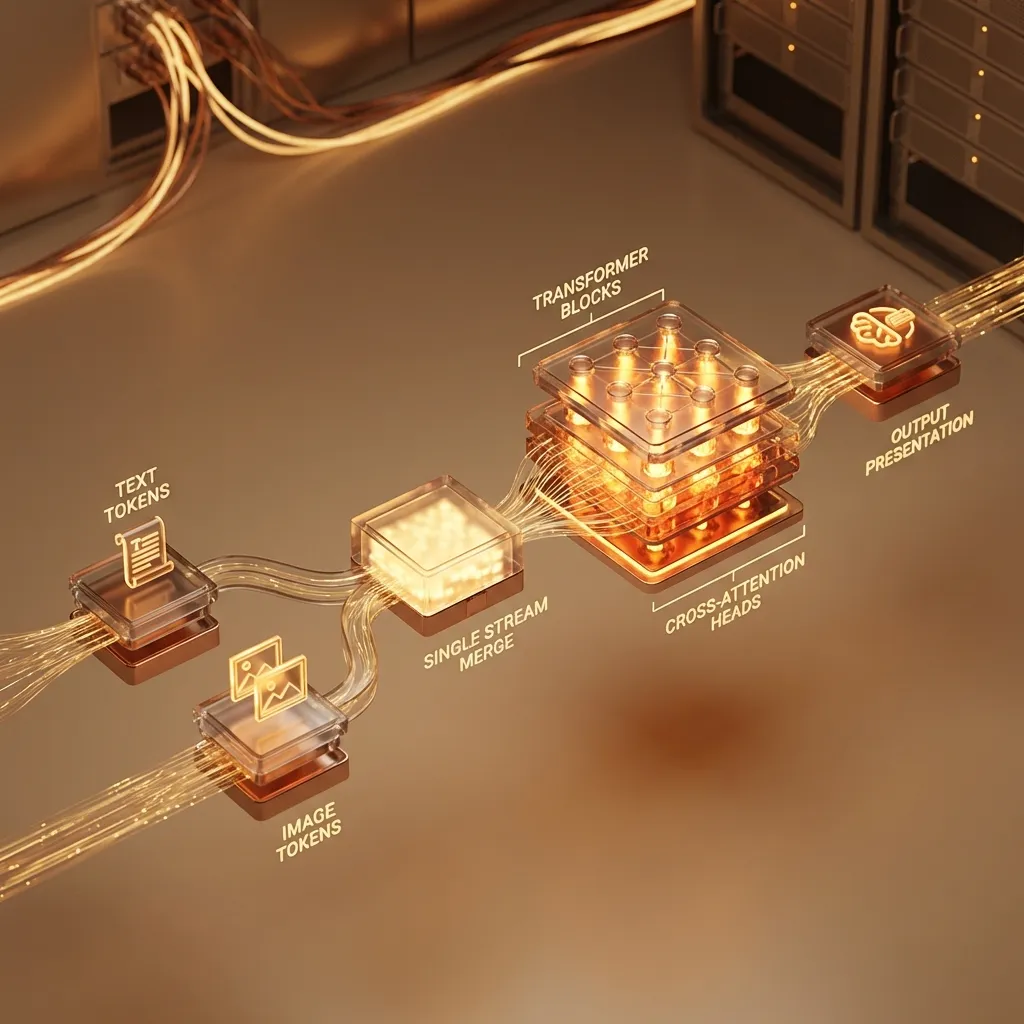

S3-DiT (Scalable Single-Stream Diffusion Transformer) treats text tokens and image VAE tokens as a unified sequence of data from the very beginning.

Instead of two parallel rivers that eventually meet, S3-DiT is one massive, efficient river. This allows for:

- Maximum Parameter Efficiency: Every parameter in the model works on both text and image understanding simultaneously.

- Stronger Semantic Alignment: The model doesnt need to "bridge the gap" between text and image; they are already in the same space.

- Bilingual Mastery: Thanks to this unified token approach, Z-Image excels at rendering both English and Chinese text, a feat where many western models struggle.

Z-Image-Turbo: Speed Without Sacrifice

The efficiency of S3-DiT shines brightest in Z-Image-Turbo. By distilling the massive 6B parameter base model, the team created a version that is not only lighter but incredibly fast.

Z-Image-Turbo can achieve sub-second inference latency on H800 GPUs and runs comfortably on consumer-grade cards with 16GB VRAM. This makes it a perfect candidate for local installation, as detailed in our Open Source Guide.

For creators, this means you can iterate on prompts faster, experiment with complex editing workflows, and generate high-resolution assets without waiting minutes for a single render.

Why This Matters for the Future

The move towards single-stream architectures like S3-DiT suggests that the future of AI isnt just about "bigger" models, but "smarter" architectures. Z-Image proves that you can achieve state-of-the-art photorealism and instruction adherence without merely throwing more compute at the problem in a brute-force manner.

Whether you are a developer looking to fine-tune the Z-Image-Base model or an artist wanting to leverage Z-Image-Edit for precise control, the S3-DiT foundation ensures you are working with one of the most advanced tools available in 2026.

Ready to try it out? Check out the LTX-2 page for more cutting-edge video models that are also pushing the boundaries of efficiency.