ComfyUI Performance Crisis: How to Fix the 2-Minute Lag That's Killing Your Workflow

You've been there. You load up ComfyUI, ready to generate some stunning images with Z-Image Turbo or your favorite model. The first few prompts blaze through in 9-12 seconds. Then, without warning, everything grinds to a halt. Your next prompt takes 2 minutes. Or 3 minutes. Or worse—it freezes completely.

This isn't just frustrating. It's killing your creative workflow. And here's the worst part: you're not alone.

The Reddit Reality Check

A recent thread on r/ROCm exposed this exact problem. A user with an AMD RX 7900 XTX reported that ComfyUI's Z-Image Turbo workflow generated images in around 9 seconds with the default prompt. But the moment they changed even a single word in their prompt, generation times ballooned to 2 minutes. The only fix? Restarting ComfyUI entirely, which temporarily restored the 9-second speed—until the next prompt change.

This isn't isolated to AMD GPUs. NVIDIA users, Apple Silicon owners, and Windows/Linux users across the board are hitting similar walls. The issue cuts to the heart of how ComfyUI manages memory, and understanding it is the first step to fixing it.

What's Actually Causing the Lag?

The 2-minute lag isn't a bug in your workflow. It's a symptom of ComfyUI's smart memory management system working against you in specific scenarios. Here's what's happening under the hood:

Smart Memory: Friend and Foe

ComfyUI introduced a "smart memory" system that optimizes VRAM usage by keeping frequently used models loaded in GPU memory while offloading less-used components to system RAM. This works brilliantly—until it doesn't. When you change prompts significantly (especially with models like Z-Image Turbo), ComfyUI's memory manager may:

- Aggressively cache model weights that no longer match your new prompt's requirements

- Fail to offload unused components, creating VRAM fragmentation

- Trigger system RAM swapping as VRAM spills over, causing massive slowdowns

The result? That 2-minute pause is your system shuffling data between GPU memory, system RAM, and even disk storage.
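To see whether VRAM really is close to full when the slowdown hits, you can check free memory from a second terminal while ComfyUI is generating. The sketch below is only an illustration: the helper name vram_headroom and the 10% warning threshold are assumptions made for this example, not anything ComfyUI defines. It relies on PyTorch's torch.cuda.mem_get_info(), which reports device-wide free and total bytes.

```python
def vram_headroom(free_bytes: int, total_bytes: int, warn_below: float = 0.10) -> dict:
    """Summarize free VRAM and flag when the free fraction drops under warn_below.

    The 10% default is an arbitrary illustrative threshold, not a ComfyUI setting.
    """
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    free_fraction = free_bytes / total_bytes
    return {
        "free_gib": round(free_bytes / 2**30, 2),
        "total_gib": round(total_bytes / 2**30, 2),
        "free_fraction": round(free_fraction, 3),
        "likely_spilling": free_fraction < warn_below,
    }


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        # mem_get_info() returns (free_bytes, total_bytes) for the current device.
        free, total = torch.cuda.mem_get_info()
        print(vram_headroom(free, total))
    else:
        print("No GPU visible to PyTorch; nothing to measure.")
```

If likely_spilling flips to True right when a prompt change stalls, that supports the memory-spillover explanation. If free memory stays comfortable, the cause is probably elsewhere.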

The Prompt Change Problem

Why does changing a single word trigger this? Z-Image Turbo (and similar models) use different computational paths based on prompt complexity. A simple prompt might use cached weights efficiently. A more complex prompt forces ComfyUI to load different model components, which collide with existing cached data. The memory manager struggles to reconcile these competing demands, leading to the freeze.

The 2026 Fix: ComfyUI Has Evolved

Here's the good news: ComfyUI v0.8.1 (released January 8, 2026) shipped with significant performance improvements that address these exact issues. If you're running an older version, updating alone might solve your problem.

Key fixes in v0.8.1:

- Fixed FP8MM offloading performance issues (a major culprit in lag spikes)

- Added PyTorch upgrade warnings to ensure you're using CUDA 13.0 for maximum speed

- Improved memory estimation for better VRAM allocation

But even with the latest version, you may need additional tuning. Here's your complete fix toolkit.

Immediate Fixes: Startup Flags That Work

ComfyUI includes several command-line flags that directly address memory-related lag. These are your first line of defense.

Disable Smart Memory

The most direct fix for the 2-minute lag is to disable smart memory management entirely. This forces ComfyUI to offload models aggressively to system RAM instead of trying to keep them in VRAM.

For Windows (NVIDIA GPUs):

Edit your run_nvidia_gpu.bat file and append the flag to the launch command it already contains. For a manual install, the equivalent is:

python main.py --disable-smart-memory

For macOS/Linux:

python3 main.py --disable-smart-memory

Trade-off: You'll see slightly slower generation times (typically 15-20% slower) because models load from system RAM instead of staying cached in VRAM. But you'll eliminate those 2-minute freezes, and your performance will be consistent regardless of prompt changes.
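A simple way to confirm that performance is now consistent is to write down how long each generation takes and flag the outliers. This is a minimal sketch: the function name find_lag_spikes and the 3x-median threshold are assumptions chosen for illustration, and the timings are made-up numbers in the range described above.

```python
from statistics import median


def find_lag_spikes(durations_s, factor=3.0):
    """Return the indices of runs that took more than factor times the median run."""
    if not durations_s:
        return []
    baseline = median(durations_s)
    return [i for i, d in enumerate(durations_s) if d > factor * baseline]


# Hypothetical per-generation timings in seconds; the third run is a 2-minute stall.
timings = [9.1, 9.4, 118.0, 9.2, 10.0]
print(find_lag_spikes(timings))  # [2]
```

Run a dozen generations with changing prompts before and after adding the flag. If the list of spikes comes back empty afterwards, the trade-off is paying off.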

Low VRAM Mode

If you're running with limited GPU memory (8GB or less), enable low VRAM mode:

python3 main.py --lowvram

This splits the model into smaller chunks that load sequentially, reducing peak VRAM usage at the cost of modest speed reduction.

Reserve VRAM

If your system is stealing GPU memory for display/other tasks:

python3 main.py --reserve-vram 2

(Replace 2 with the number of GB to reserve.)

This prevents your OS from competing with ComfyUI for GPU resources, eliminating stuttering caused by display driver interference.
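If you switch between these flags often, a tiny launcher can save some typing. The sketch below only assembles the command line from the three flags covered in this section. The function name build_launch_command and its parameters are hypothetical, and it assumes you run it from the ComfyUI folder where main.py lives.

```python
import shlex
import sys


def build_launch_command(python=sys.executable, disable_smart_memory=False,
                         lowvram=False, reserve_vram_gb=None):
    """Build the argument list for starting ComfyUI with the chosen memory flags."""
    cmd = [python, "main.py"]
    if disable_smart_memory:
        cmd.append("--disable-smart-memory")
    if lowvram:
        cmd.append("--lowvram")
    if reserve_vram_gb is not None:
        if reserve_vram_gb < 0:
            raise ValueError("reserve_vram_gb must not be negative")
        cmd += ["--reserve-vram", str(reserve_vram_gb)]
    return cmd


if __name__ == "__main__":
    cmd = build_launch_command(disable_smart_memory=True, reserve_vram_gb=2)
    # Print the command for inspection; pass cmd to subprocess.run() to launch it.
    print(shlex.join(cmd))
```

Printing the command first makes it easy to double-check exactly which flags are active before you start a long session.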

Advanced Optimization: GPU-Specific Solutions

Different GPUs require different approaches. Here's what works best for your hardware.

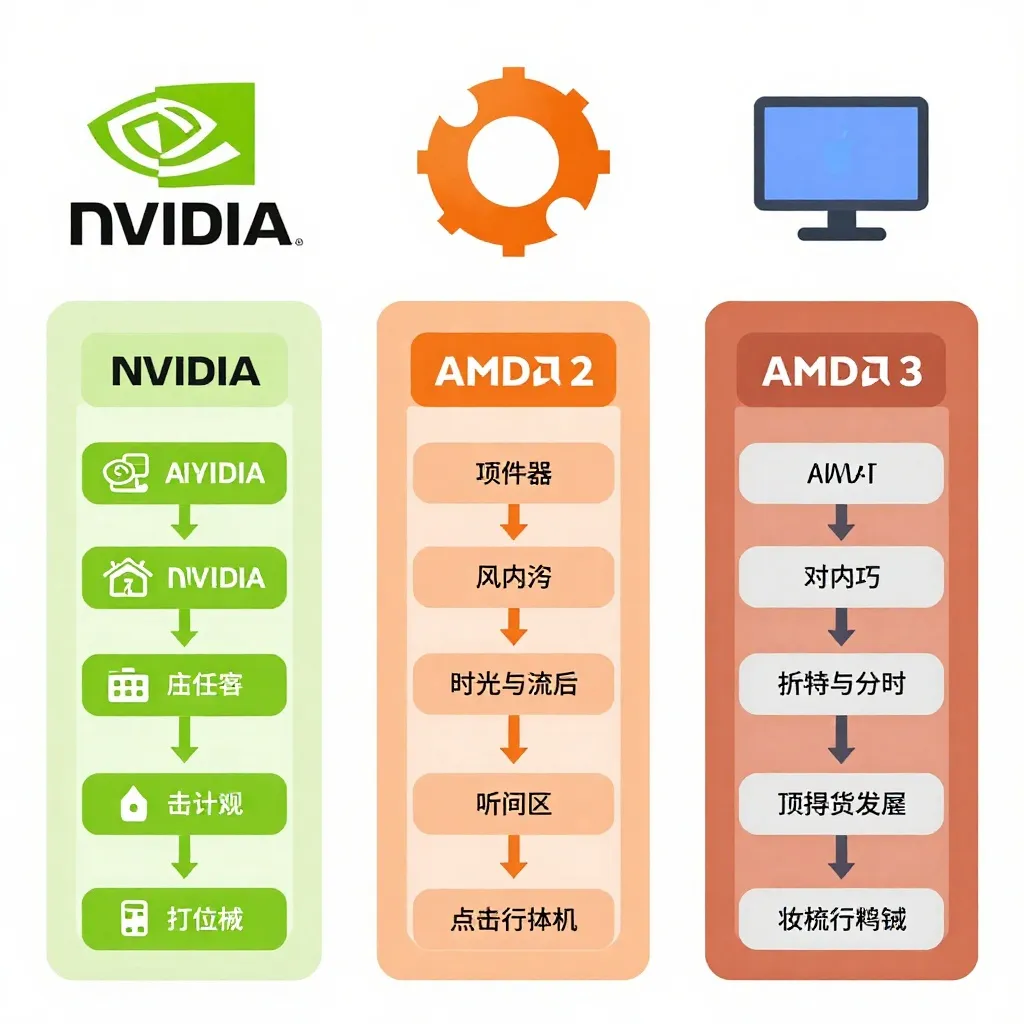

NVIDIA GPUs (RTX 20/30/40/50 Series)

NVIDIA users have access to the most advanced optimization options. Recent updates to ComfyUI introduced three major performance boosts:

1. NVFP4 Quantization (Blackwell/RTX 50-series only)

If you're using an RTX 5090, 5080, or 5070 Ti, ensure you're running PyTorch built with CUDA 13.0. This unlocks NVFP4 acceleration, which provides up to 2x faster sampling compared to FP8. However, if you're on an older PyTorch version, NVFP4 models can actually run 2x slower—so check your PyTorch version first.

2. Async Offloading (All RTX GPUs)

Enabled by default in recent ComfyUI versions, this feature allows model offloading to happen in the background while the GPU continues processing. It's invisible but effective—ensure you've updated to ComfyUI v0.7.0 or later.

3. Pinned Memory

This optimization pins system RAM pages to prevent them from being swapped to disk during model loading. Also enabled by default in recent versions.

NVIDIA Optimization Checklist:

- [ ] Update ComfyUI to v0.8.1+

- [ ] Verify PyTorch CUDA 13.0 (check with python -c "import torch; print(torch.version.cuda)")

- [ ] Use --disable-smart-memory if you experience prompt-change lag

- [ ] Close browser tabs and other GPU-accelerated apps while generating
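The PyTorch check in the list above can also be scripted. This is a small sketch under a couple of assumptions: the helper name cuda_at_least is made up for this example, and it only compares the major and minor version numbers. Note that torch.version.cuda is None on CPU-only and ROCm builds of PyTorch, so the helper treats a missing value as "not met".

```python
def cuda_at_least(version, minimum=(13, 0)):
    """Return True if a torch.version.cuda-style string such as '13.0' meets minimum."""
    if not version:
        # None or empty: this PyTorch build was not compiled against CUDA.
        return False
    parts = tuple(int(p) for p in version.split(".")[:2])
    parts = (parts + (0,))[:2]  # pad a bare major version like "13" to (13, 0)
    return parts >= minimum


if __name__ == "__main__":
    try:
        import torch
        found = torch.version.cuda
    except ImportError:
        found = None
    print(f"CUDA build: {found}, meets 13.0: {cuda_at_least(found)}")
```

Run it with the same Python interpreter that launches ComfyUI, otherwise you may be inspecting a different PyTorch install.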

AMD GPUs (RX 6000/7000 Series, RX 7900 XTX)

AMD users face unique challenges because ROCm (AMD's CUDA equivalent) handles memory differently. The Reddit user with the RX 7900 XTX? They were hitting ROCm-specific limitations.

AMD-Specific Fixes:

- Update ROCm drivers to the latest version. AMD's AI Bundle (released January 2026) includes optimized ROCm builds specifically for ComfyUI.

- Use CPU fallback for text encoders: Some users report success by forcing the text encoder to run on CPU:

python3 main.py --cpu-text-encoder

This adds 1-2 seconds per generation but prevents VRAM-related crashes. Flag names vary between ComfyUI versions, so confirm this one appears in the output of python3 main.py --help before relying on it.

- Disable hyperthreading (SMT): AMD GPUs sometimes compete with CPU threads for memory bandwidth. In your BIOS/UEFI settings, disable SMT (Simultaneous Multithreading) to reduce contention.

Apple Silicon (M1/M2/M3 Max/Ultra)

Mac users have a different problem: unified memory means VRAM and system RAM are the same pool. When you hit "lag," you're actually triggering swap to SSD, which is painfully slow.

Mac-Specific Solutions:

- Use GGUF-quantized models instead of full-precision weights. Q6_K or Q5_K quantization reduces memory usage by 40-50% with minimal quality loss.

- Monitor Memory Pressure: Open Activity Monitor → Memory tab. If the graph is red/yellow, close background apps before generating.

- Reduce batch size: If you're generating multiple images in one queue, reduce batch size to 1 to prevent swap storms.

Workflow-Level Optimizations

Sometimes the lag isn't ComfyUI's fault—it's your workflow design. Here's how to build lag-resistant workflows:

Avoid Prompt Whiplash

The 2-minute lag often triggers when you dramatically change prompt complexity between generations. If you're jumping from "a cat" to "a photorealistic cat wearing a steampunk helmet, cinematic lighting, 8k resolution, detailed fur rendering, volumetric fog," you're forcing ComfyUI to load entirely different model weights.

Solution: Group similar prompts together. Generate all your simple prompts first, then all your complex ones. This reduces model loading/unloading cycles.

Batch Smart, Not Big

Instead of queuing 20 diverse images at once, batch them by similarity:

- Batch 1: All portraits

- Batch 2: All landscapes

- Batch 3: All abstract compositions

This lets ComfyUI keep relevant weights cached between generations.
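The grouping advice in this section is easy to automate before you queue anything. The sketch below is one possible approach, not a ComfyUI feature: the function name plan_batches is hypothetical, the categories are labels you assign yourself, and word count is used as a rough stand-in for prompt complexity.

```python
from collections import defaultdict


def plan_batches(jobs):
    """Group (category, prompt) pairs by category, shortest prompt first in each group."""
    batches = defaultdict(list)
    for category, prompt in jobs:
        batches[category].append(prompt)
    # Word count is only a crude proxy for how "complex" a prompt is.
    return {
        category: sorted(prompts, key=lambda p: len(p.split()))
        for category, prompts in batches.items()
    }


jobs = [
    ("portrait", "a photorealistic portrait of a sailor, cinematic lighting"),
    ("landscape", "a misty valley"),
    ("portrait", "a cat"),
    ("landscape", "a mountain range at sunrise with volumetric fog"),
]
for category, prompts in plan_batches(jobs).items():
    print(category, prompts)
```

Queue one category at a time in the order returned, so similar prompts run back to back and simple ones come before complex ones.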

Use Model-Specific Workflows

Different models (Z-Image Turbo, Flux, SDXL) have different memory footprints. Some users report that keeping separate ComfyUI installations for different models prevents cross-contamination of cached weights. It's more disk space, but it can eliminate lag.

When All Else Fails: The Nuclear Option

If you've tried everything above and still see 2-minute freezes, these last-resort solutions can help—at a cost.

Full CPU Mode

python3 main.py --cpu

This forces everything to run on your CPU. It's slow (5-10x slower than GPU), but it's consistent and eliminates VRAM-related issues entirely. Use this for troubleshooting to confirm that the issue is GPU-specific.

Downgrade Temporarily

Some users report that older ComfyUI versions (pre-v0.7.0) don't exhibit the 2-minute lag because they use simpler memory management. You can download older versions from the ComfyUI GitHub releases page. You'll miss out on new features, but you'll gain stability.

Switch to Automatic1111 (Temporarily)

Automatic1111's Stable Diffusion Web UI uses a different architecture that doesn't exhibit the same lag pattern. If you have a critical project deadline and can't afford ComfyUI freezes, A1111 can be a reliable fallback. It lacks ComfyUI's node-based flexibility, but it's faster for simple text-to-image workflows.

Once you've completed your project, return to ComfyUI and try the fixes above—many users report that lag issues get resolved in subsequent updates.

The Future: ComfyUI Is Listening

The ComfyUI development team is acutely aware of these performance issues. January 2026's v0.8.1 release was explicitly focused on memory optimization, and the team has hinted at more improvements coming in v0.8.2.

Upcoming features on the roadmap:

- Improved smart memory heuristics that better detect when aggressive offloading is needed

- Per-node memory profiling to help identify workflow bottlenecks

- Optimized LTX-2 support with new weight streaming techniques for long video generation

Your bug reports and forum posts matter. The Reddit thread about the 2-minute lag? The team is watching. Keep reporting issues with detailed system specs, GPU model, driver versions, and workflow files.

Your Action Plan

Don't let the 2-minute lag kill your workflow. Follow this prioritized checklist:

- Update ComfyUI to v0.8.1+ (5 minutes)

- Add --disable-smart-memory to your startup script (1 minute)

- Test with simple prompts to verify the fix is working

- Monitor VRAM usage with nvidia-smi (NVIDIA) or rocm-smi (AMD) during generation

- If lag persists, try low VRAM mode or GPU-specific fixes above

- Join the discussion on r/comfyui or ComfyUI Discord to share your results
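For the monitoring step on NVIDIA cards, you can poll nvidia-smi from a script instead of watching it by hand. This is a rough sketch that assumes nvidia-smi is on your PATH and returns plain integer MiB values. The function name parse_memory_csv is made up for this example, and AMD users would need a different command.

```python
import subprocess

# Ask nvidia-smi for used and total memory per GPU as bare numbers in MiB.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_csv(text):
    """Parse lines like '21504, 24576' into a list of (used_mib, total_mib) tuples."""
    rows = []
    for line in text.strip().splitlines():
        used, total = (int(field.strip()) for field in line.split(","))
        rows.append((used, total))
    return rows


if __name__ == "__main__":
    output = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    for gpu_index, (used, total) in enumerate(parse_memory_csv(output)):
        print(f"GPU {gpu_index}: {used} / {total} MiB ({used / total:.0%} used)")
```

Run it a few times during a generation and note the numbers alongside your timings. Those figures are also useful to include when you report an issue.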

Performance issues suck. But ComfyUI remains the most powerful tool for AI image generation, and these fixes will get you back to creating instead of waiting. The 2-minute lag is solvable—and with the right configuration, you'll be back to 9-second generations before you know it.

Note: This article was updated on January 22, 2026 to reflect ComfyUI v0.8.1's performance improvements. Memory optimization techniques continue to evolve—check ComfyUI's official changelog for the latest updates.