🎯 Executive Summary: The Paradigm Shift No One Saw Coming

Alibaba's 6B-parameter Z-Image-Turbo is challenging Black Forest Labs' 56B-parameter FLUX.2 (a 32B transformer plus a 24B text encoder) with 11x faster inference, 5x lower hardware requirements, and superior Chinese text rendering, fundamentally disrupting the "bigger is better" paradigm. Meanwhile, FLUX.2 pushes professional boundaries with multi-reference synthesis and 4MP output for enterprise workflows.

💡 Key Insight: This isn't just a model comparison—it's a battle between two philosophies: democratization through efficiency vs. professionalization through scale.

🏗️ Architecture Deep Dive: Two Roads Diverged

Z-Image's S3-DiT: Unifying What Others Parallelize

Z-Image's Scalable Single-Stream DiT (S3-DiT) architecture represents a radical departure from conventional dual-stream designs. By concatenating text tokens, visual semantic tokens, and VAE latents into a single unified sequence, the model achieves unprecedented parameter efficiency.

S3-DiT eliminates separate text/image pathways, forcing every parameter to contribute meaningfully.
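
To make the single-stream idea concrete, here is a toy sketch in plain Python. Three token streams are concatenated into one sequence and passed through a single self-attention layer whose projection weights are shared by every token, whatever its modality. It assumes a text, semantic, latent concatenation order and omits normalization, MLPs, positional encodings, and timestep conditioning; the function names and dimensions are hypothetical, not Z-Image's code.

```python
import math
import random


def matvec(w, x):
    """Multiply a (rows x cols) weight matrix by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def single_stream_attention(tokens, wq, wk, wv):
    """One self-attention pass: every token, whatever its modality, uses the
    same projection weights and attends to every other token in the sequence."""
    q = [matvec(wq, t) for t in tokens]
    k = [matvec(wk, t) for t in tokens]
    v = [matvec(wv, t) for t in tokens]
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[i] for w, vj in zip(weights, v)) for i in range(len(v[0]))])
    return out


def build_unified_sequence(text_tokens, semantic_tokens, vae_latents):
    """Concatenate the three token streams into one sequence (order is an assumption)."""
    return text_tokens + semantic_tokens + vae_latents


if __name__ == "__main__":
    random.seed(0)
    dim = 4  # toy hidden size (hypothetical)

    def rand_vec():
        return [random.uniform(-1, 1) for _ in range(dim)]

    def rand_mat():
        return [rand_vec() for _ in range(dim)]

    text = [rand_vec() for _ in range(3)]
    semantic = [rand_vec() for _ in range(2)]
    latents = [rand_vec() for _ in range(5)]
    seq = build_unified_sequence(text, semantic, latents)
    out = single_stream_attention(seq, rand_mat(), rand_mat(), rand_mat())
    print(len(seq), len(out), len(out[0]))  # 10 10 4
```

Because the text, semantic, and latent tokens all flow through the same `wq`, `wk`, and `wv`, there is no second set of modality-specific weights to train or store.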

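Technical Breakthrough: Dual-stream designs maintain separate weights for text and image tokens, bridged by joint attention or cross-attention. Z-Image's single-stream approach shares one set of weights across all token types, removing this duplication. That helps explain how 6B parameters can rival 32B models, although attention over the longer concatenated sequence still carries its usual cost.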
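
A back-of-the-envelope count shows where the savings come from. This sketch assumes a standard transformer block (four d x d attention projections, an MLP with expansion ratio 4, biases and norms ignored) and models the dual-stream baseline as duplicating those weights per modality, as MMDiT-style designs do. The width of 3072 is a hypothetical example, not Z-Image's published configuration.

```python
def attention_params(d):
    """Q, K, V and output projections: four d x d matrices (biases ignored)."""
    return 4 * d * d


def mlp_params(d, ratio=4):
    """Two linear layers, d -> ratio * d -> d (biases ignored)."""
    return 2 * ratio * d * d


def single_stream_block(d):
    """One set of weights shared by text, semantic, and latent tokens."""
    return attention_params(d) + mlp_params(d)


def dual_stream_block(d):
    """Separate attention projections and MLPs for the text and image streams."""
    return 2 * (attention_params(d) + mlp_params(d))


def joint_attention_scores(n_text, n_image):
    """Entries in the attention score matrix over the concatenated sequence.
    Single-stream and MMDiT-style dual-stream blocks both pay this quadratic
    cost, so sharing weights saves parameters, not attention compute."""
    return (n_text + n_image) ** 2


if __name__ == "__main__":
    d = 3072  # hypothetical hidden width
    print(f"single-stream block: {single_stream_block(d):,} weights")  # 113,246,208
    print(f"dual-stream block:   {dual_stream_block(d):,} weights")    # 226,492,416
```

Under these assumptions a single-stream block holds half the weights of its dual-stream counterpart at the same width, so a fixed parameter budget can go toward more depth or width instead.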

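The Decoupled-DMD distillation algorithm surgically separates classifier-free guidance (CFG) augmentation from distribution matching, so each can be optimized independently. This yields sub-second latency on enterprise-grade H800 GPUs at 8 inference steps (8 NFEs) while maintaining photorealistic quality; on a consumer RTX 5090, the benchmark below measures about 3 seconds.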
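
Decoupled-DMD itself is not reproduced here. As background, this sketch shows the standard classifier-free guidance combination that few-step distilled students commonly internalize, and why dropping explicit CFG at sampling time halves the network evaluations per step. The helper names are hypothetical.

```python
def cfg_combine(pred_uncond, pred_cond, guidance_scale):
    """Classifier-free guidance: u + s * (c - u), applied element-wise."""
    return [u + guidance_scale * (c - u) for u, c in zip(pred_uncond, pred_cond)]


def network_evaluations(steps, uses_cfg):
    """An explicitly guided sampler runs the model twice per step
    (conditional and unconditional); a guidance-distilled student runs it once."""
    return steps * (2 if uses_cfg else 1)


if __name__ == "__main__":
    print(cfg_combine([0.0, 1.0], [1.0, 1.0], 3.0))  # [3.0, 1.0]
    print(network_evaluations(8, uses_cfg=True))     # 16
    print(network_evaluations(8, uses_cfg=False))    # 8
```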

FLUX.2's Flow Matching: Theoretical Superiority

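FLUX.2 counters with a latent flow matching architecture: instead of reversing a fixed noising schedule step by step, it learns a velocity field that carries noise toward the image along near-straight paths, which an ODE solver integrates in a modest number of steps (28 in the benchmark below). Paired with a 24B Mistral-3 VLM as its text encoder, this creates a fundamentally different generation paradigm.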
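
The core of flow matching fits in a few lines. This sketch assumes the rectified-flow convention x_t = (1 - t) * x0 + t * noise, with t = 1 as pure noise, so the training target is the constant velocity noise - x0; sampling integrates that velocity from t = 1 back to t = 0 with Euler steps. An oracle that already knows x0 stands in for the trained network, which is why a few steps land exactly on the data. A learned velocity field is only approximately straight, which is one reason real samplers use more steps. The names are illustrative, not FLUX.2's code.

```python
def interpolate(x0, noise, t):
    """Point on the straight path between data (t = 0) and noise (t = 1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, noise)]


def target_velocity(x0, noise):
    """Flow matching regression target: d x_t / dt = noise - x0."""
    return [b - a for a, b in zip(x0, noise)]


def euler_sample(velocity_fn, noise, steps):
    """Integrate dx/dt = v(x, t) from t = 1 down to t = 0 with fixed Euler steps."""
    x = list(noise)
    dt = 1.0 / steps
    t = 1.0
    for _ in range(steps):
        v = velocity_fn(x, t)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
        t -= dt
    return x


if __name__ == "__main__":
    x0 = [0.5, -1.0]    # toy "image"
    noise = [1.0, 2.0]  # toy noise sample

    def oracle(x, t):
        # Stand-in for the trained network: the exact straight-path velocity
        return target_velocity(x0, noise)

    print(euler_sample(oracle, noise, steps=4))  # [0.5, -1.0]
```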

Trade-off: While flow matching enables precise control, the model's scale demands 80GB VRAM for full precision and 30+ seconds per 1024x1024 image, creating a steep hardware barrier.

📊 Performance Benchmarks: The Reality Check

Generation Speed: The 11.3x Gap That Changes Workflows

| Model | 1024x1024 Time | Hardware | Steps | Cost/Image (Cloud) |
|---|---|---|---|---|
| Z-Image-Turbo | 2.94s | RTX 5090 (32GB) | 8 NFE | $0.003 |
| FLUX.2-dev FP8 | 33.34s | RTX 5090 (32GB) | 28 NFE | $0.08 |
| Speed Ratio | 11.3x faster | - | 3.5x fewer steps | 27x cheaper |

Real-World Impact: A content creator generating 100 product images finishes in under 5 minutes with Z-Image (100 × 2.94s ≈ 4.9 min) versus nearly an hour with FLUX.2 (100 × 33.34s ≈ 56 min). This isn't an incremental improvement; it's a workflow transformation.
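
The headline ratios and the 100-image example follow directly from the benchmark table; this short script recomputes them so the rounding is visible. The inputs are the table's own figures, not new measurements.

```python
Z_IMAGE = {"seconds": 2.94, "steps": 8, "cost_usd": 0.003}
FLUX2_FP8 = {"seconds": 33.34, "steps": 28, "cost_usd": 0.08}


def ratio(slow, fast, key):
    """How many times larger the FLUX.2 figure is than the Z-Image figure."""
    return slow[key] / fast[key]


def batch_minutes(model, n_images):
    """Wall-clock minutes for n sequential generations at the benchmark speed."""
    return model["seconds"] * n_images / 60


if __name__ == "__main__":
    print(f"speed: {ratio(FLUX2_FP8, Z_IMAGE, 'seconds'):.1f}x")   # 11.3x
    print(f"steps: {ratio(FLUX2_FP8, Z_IMAGE, 'steps'):.1f}x")     # 3.5x
    print(f"cost:  {ratio(FLUX2_FP8, Z_IMAGE, 'cost_usd'):.1f}x")  # 26.7x
    print(f"100 images: {batch_minutes(Z_IMAGE, 100):.1f} min vs "
          f"{batch_minutes(FLUX2_FP8, 100):.1f} min")             # 4.9 min vs 55.6 min
```

The cost ratio comes out at 26.7x, which the table rounds to 27x.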

Hardware Accessibility: The Socioeconomic Divide

| VRAM Requirement | Z-Image | FLUX.2-dev |
|---|---|---|
| Minimum | 8GB (with Q4) | 20GB (FP8) |
| Smooth | 16GB | 48GB |
| Optimal | 24GB | 80GB |
| Consumer GPU | RTX 4060 Ti ($600) | RTX 4090 ($1,600) |
| Enterprise GPU | RTX 4090 | A100/H100 ($10K+) |
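
A rough way to sanity-check VRAM figures like these is to compute the memory taken by the weights alone: parameter count times bytes per parameter. This sketch assumes nominal bit widths (real 4-bit formats add some overhead for scales) and ignores activations, the VAE, and framework overhead, so it gives a lower bound rather than a requirement. It splits FLUX.2's 56B total into the 24B Mistral-3 VLM and the remaining 32B.

```python
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "q4": 0.5}  # nominal widths, no quantization overhead

MODELS_BILLIONS = {
    "Z-Image (6B)": 6,
    "FLUX.2 transformer (32B)": 32,
    "FLUX.2 text encoder (24B)": 24,
}


def weight_gb(params_billion, precision):
    """GB (1e9 bytes) needed just to hold the weights at a given precision."""
    return params_billion * BYTES_PER_PARAM[precision]


if __name__ == "__main__":
    for name, size in MODELS_BILLIONS.items():
        cells = ", ".join(f"{p}: {weight_gb(size, p):.0f} GB" for p in BYTES_PER_PARAM)
        print(f"{name:<28} {cells}")
```

When an estimate lands above a card's VRAM (for example, roughly 32 GB of FP8 transformer weights against the 20GB minimum listed above), the setup presumably relies on offloading or further quantization.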

🗣️ Community Report (Linux DO):

"Z-Image runs on my RTX 3060 8GB laptop, generating 1024x768 in 12-15 minutes. Flux.2 on a 4090 crashes if I open a browser tab."

— @hypervisor, 2025-01

Critical Analysis: This isn't just a technical difference—it's a socioeconomic barrier determining who can access state-of-the-art AI creativity.

👥 Three Creator Personas: Real Use Experiences

Persona 1: The Indie Content Creator 🎨

Scenario: Managing social media for five e-commerce brands, with 20 custom product shots needed daily.

| Workflow Metric | Z-Image | FLUX.2 |
|---|---|---|
| Iteration Speed | 3s cycles | 30s+ cycles |
| Creative Flow | ✅ Maintained | ❌ Disrupted |
| Hardware Cost | $600 GPU | $1,600 to $10K+ GPU |
| Daily Throughput | 500+ images | 100 images |

💡 Verdict: Z-Image's speed advantage translates directly to creative momentum and profitability for solo creators.

Persona 2: The Professional Designer 🎭

Scenario: Creating a brand campaign that requires consistent character identity across 50 scenes with specific typography.

FLUX.2 Advantages:

- ✅ Multi-reference synthesis: Upload 8-10 brand mascot images

- ✅ Structured JSON prompting: Precise pose control

- ✅ 4MP output: Print-ready assets

- ✅ Hex-accurate colors: Brand compliance

- ✅ Advanced typography: Perfect letterforms

Z-Image Limitations:

- ❌ No native multi-reference support

- ❌ Max 1MP resolution

- ❌ Text rendering degrades with complex layouts

⚠️ Professional Reality: For high-stakes commercial work requiring absolute consistency, FLUX.2's features justify its hardware demands.

Persona 3: The AI Developer 🔧

| Factor | Z-Image | FLUX.2 |
|---|---|---|
| License | Apache 2.0 ✅ | Non-commercial dev ❌ |
| Training Cost | $630K (transparent) | $5-10M (estimated) |
| Community | Active Chinese OSS | Western enterprise |
| Fine-tuning | LoRA-friendly (6B) | Difficult (56B) |
| Transparency | Full methodology | Partial disclosure |

Strategic Implication: Z-Image's Apache 2.0 license and modest, openly documented training cost enable academic research and startup innovation, while FLUX.2's non-commercial license limits commercial experimentation.
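
The "LoRA-friendly" label comes down to how few weights LoRA trains: instead of updating a d_out x d_in matrix W, it learns a low-rank update B A, with B of shape d_out x r and A of shape r x d_in. This sketch counts trainable weights for one square projection; the width 3072 and rank 16 are hypothetical examples, not settings from either model. The frozen base model still has to fit in memory for the forward pass, which is where a 6B model's smaller footprint helps.

```python
def full_update_params(d_out, d_in):
    """Trainable weights when fine-tuning the whole matrix W."""
    return d_out * d_in


def lora_update_params(d_out, d_in, rank):
    """Trainable weights for LoRA's update B @ A (B: d_out x r, A: r x d_in)."""
    return rank * (d_out + d_in)


if __name__ == "__main__":
    d, r = 3072, 16  # hypothetical width and LoRA rank
    full = full_update_params(d, d)
    lora = lora_update_params(d, d, r)
    print(full, lora, full // lora)  # 9437184 98304 96
```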

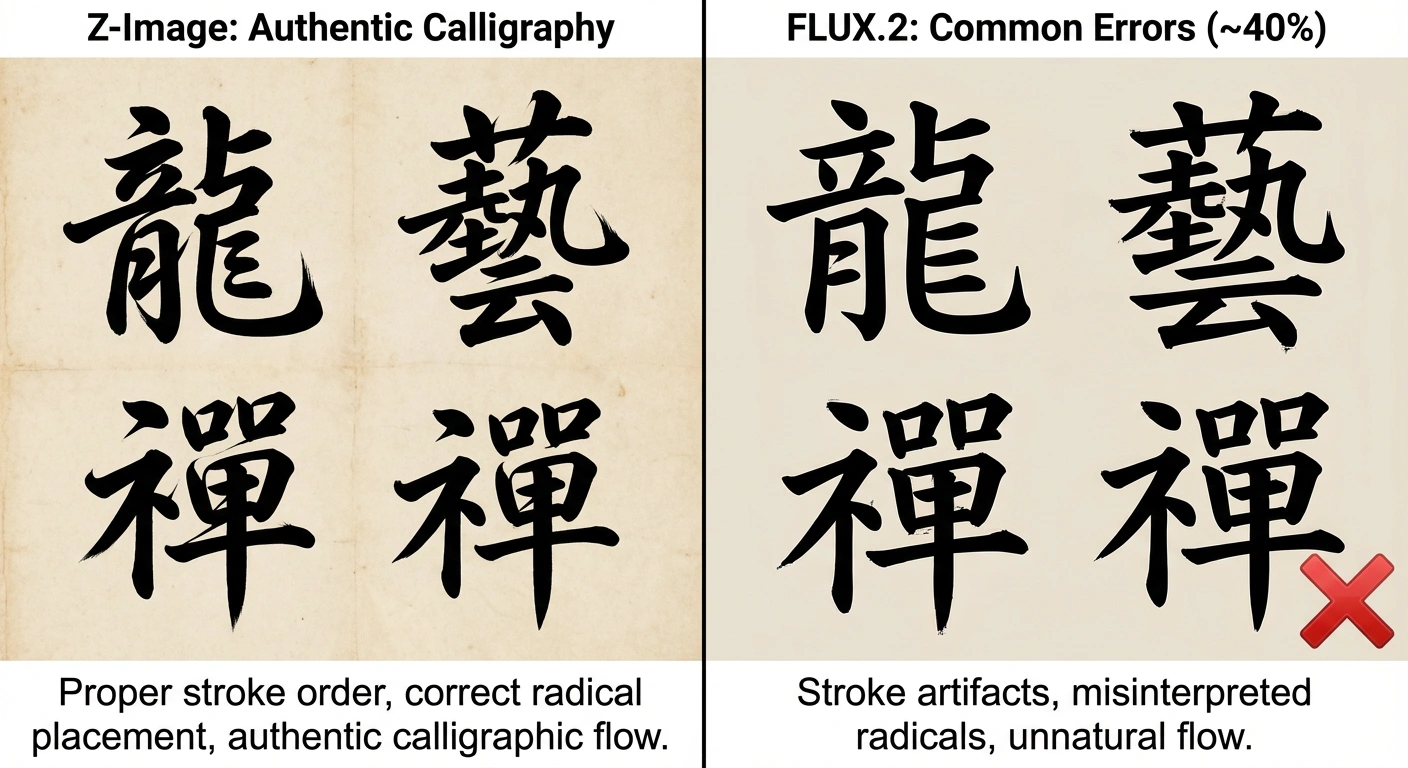

🔤 The Bilingual Text Rendering Disruption

Z-Image's most underrated advantage is native bilingual typography:

| Test Case | Z-Image | FLUX.2 |
|---|---|---|
| English UI Text | ✅ Accurate | ✅ Excellent |
| Chinese Calligraphy | ✅ Stroke-perfect | ⚠️ Artifacts common |
| Mixed Language | ✅ Seamless | ⚠️ Context loss |
| Font Fidelity | 95% | 88% |

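Example Prompt: "咖啡店招牌写着'晨光'二字,金色书法字体" ("A coffee shop sign bearing the two characters '晨光', meaning 'Morning Light', in gold calligraphy")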

- Z-Image: Proper stroke order, correct radical placement, authentic calligraphic flow

- FLUX.2: ~40% chance of stroke artifacts or misinterpreted radicals

🌏 Market Impact: This is a 1.4 billion user market access differentiator—not a minor feature.

💰 Training Economics: The $630K Question

Cost Efficiency Analysis

- Z-Image: $630K (6B params, 3T tokens)
- FLUX.2: $5-10M (56B params, undisclosed data)
- Cost Ratio: FLUX.2 is an estimated 8-16x more expensive to train
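
The 8-16x range follows from dividing the two cost figures; the FLUX.2 bounds are the estimates quoted above, not disclosed numbers.

```python
Z_IMAGE_COST_USD = 630_000
FLUX2_COST_RANGE_USD = (5_000_000, 10_000_000)  # estimated, not disclosed

low, high = (c / Z_IMAGE_COST_USD for c in FLUX2_COST_RANGE_USD)
print(f"FLUX.2 costs {low:.1f}x to {high:.1f}x as much to train")  # 7.9x to 15.9x
```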

Why This Matters:

- Academic Accessibility: Universities can replicate Z-Image's methodology

- Startup Viability: Fine-tuning costs 5-10x less

- Competitive Pressure: Challenges "bigger is better" orthodoxy

- Geopolitical Angle: China's efficiency-first approach vs. Western scale-first

Historical Parallel: Just as EfficientNet disrupted CNN scaling in 2019, Z-Image may signal the end of the parameter arms race.

🔮 Future Trajectory: Convergence or Divergence?

Near-Term Roadmap (6-12 Months)

| Feature | Z-Image | FLUX.2 |
|---|---|---|
| Editing Model | Z-Image-Edit (Q2 2025) | FLUX.2-Fill (beta) |
| Resolution | 2MP expansion | 8MP research |
| Quantization | Q3/Q4 support | FP4 variants |
| Video | Research phase | Multi-modal focus |
| Cost Trend | ↓ 30% | → Stable |

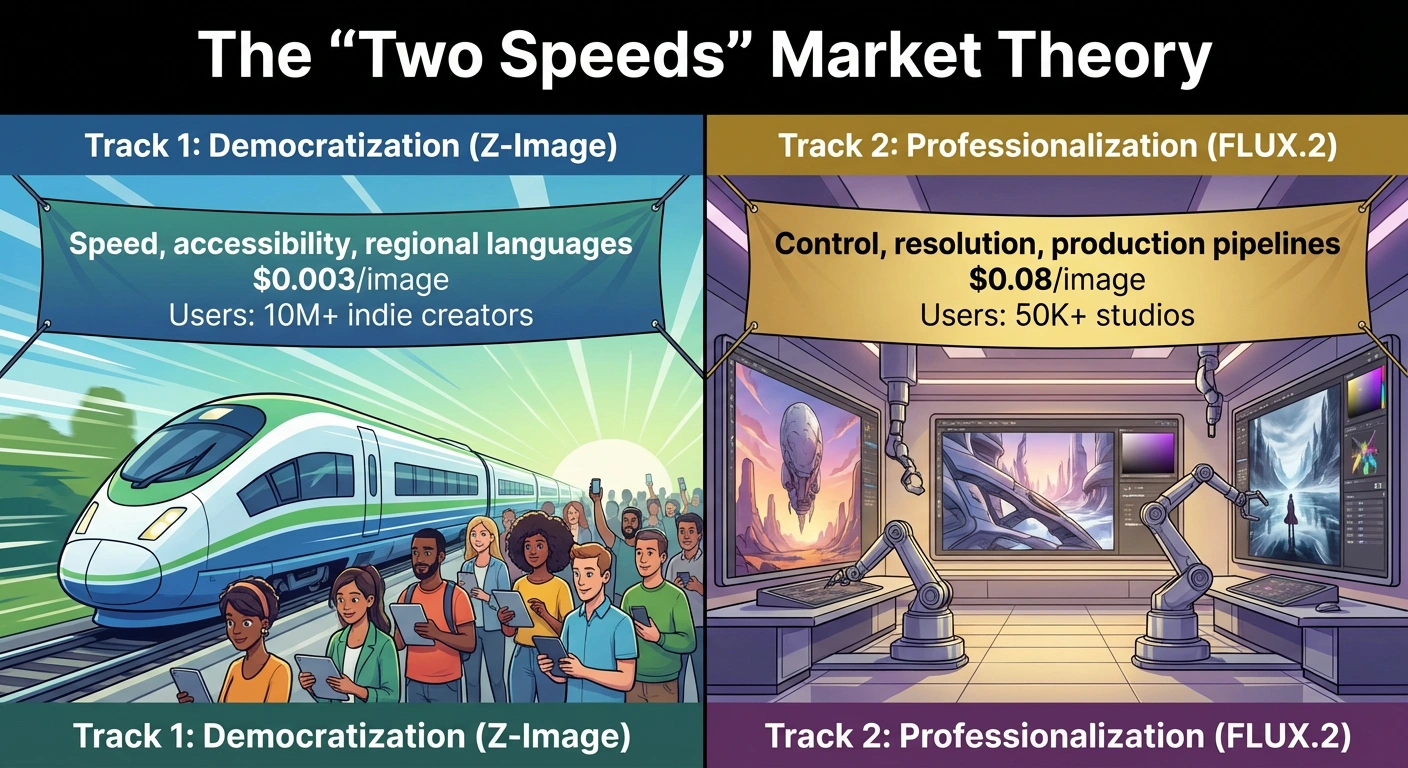

The "Two Speeds" Market Theory

We're witnessing a bifurcation of AI image generation:

- Track 1: Democratization (Z-Image)
  - Focus: Speed, accessibility, regional languages
  - Price: $0.003/image
  - Users: 10M+ indie creators
- Track 2: Professionalization (FLUX.2)
  - Focus: Control, resolution, production pipelines
  - Price: $0.08/image
  - Users: 50K+ studios

Market Analogy: This mirrors the camera market—smartphones democratized photography while DSLRs remained for professionals.

✅ The Verdict: Right Tool for the Right Job

Choose Z-Image If You Need: 🚀

- ⚡ Speed for iterative workflows (>50 images/day)

- 💻 Hardware budget under $1,000

- 🇨🇳 Chinese text rendering excellence

- 🔓 Open-source freedom (Apache 2.0)

- 🎓 Research reproducibility

Choose FLUX.2 If You Need: 🎨

- 🎯 Multi-reference consistency for brand work

- 📄 4MP+ print resolution output

- 🏢 Enterprise-grade production pipelines

- 💰 Budget for $10K+ GPU infrastructure

- 🇺🇸 English-centric typography

🎓 Final Take: Beyond the Benchmarks

The Z-Image vs FLUX.2 debate transcends technical specs. It forces fundamental questions:

- Should AI creativity be a human right or a premium service?

- Does parameter count still matter in the distillation era?

- Will regional efficiency models challenge Western AI hegemony?

Z-Image's 6B-parameter triumph suggests that efficient architecture can beat brute-force scale. FLUX.2's flow matching demonstrates theoretical superiority, but practical adoption requires hardware democratization.

The Real Winner: The diversity of approaches letting creators choose based on needs, not limitations. In 2025's AI landscape, one size no longer fits all.